Полный список

Раньше мы для View-компонентов использовали OnClickListener и ловили короткие нажатия. Теперь попробуем ловить касания и перемещения пальца по компоненту. Они состоят из трех типов событий:

— нажатие (палец прикоснулся к экрану)

— движение (палец движется по экрану)

— отпускание (палец оторвался от экрана)

Все эти события мы сможем ловить в обработчике OnTouchListener, который присвоим для View-компонента. Этот обработчик дает нам объект MotionEvent, из которого мы извлекаем тип события и координаты.

На этом уроке рассмотрим только одиночные касания. А мультитач – на следующем уроке.

Project name: P1021_Touch

Build Target: Android 2.3.3

Application name: Touch

Package name: ru.startandroid.develop.p1021touch

Create Activity: MainActivity

strings.xml и main.xml нам не понадобятся, их не трогаем.

MainActivity реализует интерфейс OnTouchListener для того, чтобы выступить обработчиком касаний.

В onCreate мы создаем новый TextView, сообщаем ему, что обработчиком касаний будет Activity, и помещаем на экран.

Интерфейс OnTouchListener предполагает, что Activity реализует его метод onTouch. На вход методу идет View для которого было событие касания и объект MotionEvent с информацией о событии.

Методы getX и getY дают нам X и Y координаты касания. Метод getAction дает тип события касания:

ACTION_DOWN – нажатие

ACTION_MOVE – движение

ACTION_UP – отпускание

ACTION_CANCEL – практически никогда не случается. Насколько я понял, возникает в случае каких-либо внутренних сбоев, и следует трактовать это как ACTION_UP.

В случае ACTION_DOWN мы пишем в sDown координаты нажатия.

В случае ACTION_MOVE пишем в sMove координаты точки текущего положения пальца. Если мы будем перемещать палец по экрану – этот текст будет постоянно меняться.

В случае ACTION_UP или ACTION_CANCEL пишем в sUp координаты точки, в которой отпустили палец.

Все это в конце события выводим в TextView. И возвращаем true – мы сами обработали событие.

Теперь мы будем водить пальцем по экрану (курсором по эмулятору) в приложении, и на экране увидим координаты начала движения, текущие координаты и координаты окончания движения.

Все сохраним и запустим приложение.

Ставим палец (курсор) на экран

Если вчерашний вечер не удался, голова не болит, рука тверда и не дрожит :), то появились координаты нажатия.

Если же рука дрогнула, то появится еще и координаты перемещения.

Продолжаем перемещать палец и видим, как меняются координаты Move.

Теперь отрываем палец от экрана и видим координаты точки, в которой это произошло

В целом все несложно. При мультитаче процесс немного усложнится, там уже будем отслеживать до 10 касаний.

Если вы уже знакомы с техникой рисования в Android, то вполне можете создать приложение выводящее на экран геометрическую фигуру, которую можно пальцем перемещать. Простейший пример реализации можно посмотреть тут: http://forum.startandroid.ru/viewtopic.php?f=28&t=535.

На следующем уроке:

— обрабатываем множественные касания

Присоединяйтесь к нам в Telegram:

— в канале StartAndroid публикуются ссылки на новые статьи с сайта startandroid.ru и интересные материалы с хабра, medium.com и т.п.

— в чатах решаем возникающие вопросы и проблемы по различным темам: Android, Kotlin, RxJava, Dagger, Тестирование

— ну и если просто хочется поговорить с коллегами по разработке, то есть чат Флудильня

— новый чат Performance для обсуждения проблем производительности и для ваших пожеланий по содержанию курса по этой теме

Источник

Touch in Android

Much like iOS, Android creates an object that holds data about the user’s physical interaction with the screen – an Android.View.MotionEvent object. This object holds data such as what action is performed, where the touch took place, how much pressure was applied, etc. A MotionEvent object breaks down the movement into to the following values:

An action code that describes the type of motion, such as the initial touch, the touch moving across the screen, or the touch ending.

A set of axis values that describe the position of the MotionEvent and other movement properties such as where the touch is taking place, when the touch took place, and how much pressure was used. The axis values may be different depending on the device, so the previous list does not describe all axis values.

The MotionEvent object will be passed to an appropriate method in an application. There are three ways for a Xamarin.Android application to respond to a touch event:

Assign an event handler to View.Touch — The Android.Views.View class has an EventHandler which applications can assign a handler to. This is typical .NET behavior.

Implementing View.IOnTouchListener — Instances of this interface may be assigned to a view object using the View. SetOnListener method.This is functionally equivalent to assigning an event handler to the View.Touch event. If there is some common or shared logic that many different views may need when they are touched, it will be more efficient to create a class and implement this method than to assign each view its own event handler.

Override View.OnTouchEvent — All views in Android subclass Android.Views.View . When a View is touched, Android will call the OnTouchEvent and pass it a MotionEvent object as a parameter.

Not all Android devices support touch screens.

Adding the following tag to your manifest file causes Google Play to only display your app to those devices that are touch enabled:

Gestures

A gesture is a hand-drawn shape on the touch screen. A gesture can have one or more strokes to it, each stroke consisting of a sequence of points created by a different point of contact with the screen. Android can support many different types of gestures, from a simple fling across the screen to complex gestures that involve multi-touch.

Android provides the Android.Gestures namespace specifically for managing and responding to gestures. At the heart of all gestures is a special class called Android.Gestures.GestureDetector . As the name implies, this class will listen for gestures and events based on MotionEvents supplied by the operating system.

To implement a gesture detector, an Activity must instantiate a GestureDetector class and provide an instance of IOnGestureListener , as illustrated by the following code snippet:

An Activity must also implement the OnTouchEvent and pass the MotionEvent to the gesture detector. The following code snippet shows an example of this:

When an instance of GestureDetector identifies a gesture of interest, it will notify the activity or application either by raising an event or through a callback provided by GestureDetector.IOnGestureListener . This interface provides six methods for the various gestures:

OnDown — Called when a tap occurs but is not released.

OnFling — Called when a fling occurs and provides data on the start and end touch that triggered the event.

OnLongPress — Called when a long press occurs.

OnScroll — Called when a scroll event occurs.

OnShowPress — Called after an OnDown has occurred and a move or up event has not been performed.

OnSingleTapUp — Called when a single tap occurs.

In many cases applications may only be interested in a subset of gestures. In this case, applications should extend the class GestureDetector.SimpleOnGestureListener and override the methods that correspond to the events that they are interested in.

Custom Gestures

Gestures are a great way for users to interact with an application. The APIs we have seen so far would suffice for simple gestures, but might prove a bit onerous for more complicated gestures. To help with more complicated gestures, Android provides another set of API’s in the Android.Gestures namespace that will ease some of the burden associated with custom gestures.

Creating Custom Gestures

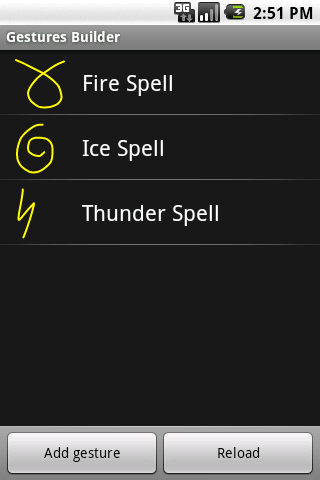

Since Android 1.6, the Android SDK comes with an application pre-installed on the emulator called Gestures Builder. This application allows a developer to create pre-defined gestures that can be embedded in an application. The following screen shot shows an example of Gestures Builder:

An improved version of this application called Gesture Tool can be found Google Play. Gesture Tool is very much like Gestures Builder except that it allows you to test gestures after they have been created. This next screenshot shows Gestures Builder:

Gesture Tool is a bit more useful for creating custom gestures as it allows the gestures to be tested as they are being created and is easily available through Google Play.

Gesture Tool allows you create a gesture by drawing on the screen and assigning a name. After the gestures are created they are saved in a binary file on the SD card of your device. This file needs to be retrieved from the device, and then packaged with an application in the folder /Resources/raw. This file can be retrieved from the emulator using the Android Debug Bridge. The following example shows copying the file from a Galaxy Nexus to the Resource directory of an application:

Once you have retrieved the file it must be packaged with your application inside the directory /Resources/raw. The easiest way to use this gesture file is to load the file into a GestureLibrary, as shown in the following snippet:

Using Custom Gestures

To recognize custom gestures in an Activity, it must have an Android.Gesture.GestureOverlay object added to its layout. The following code snippet shows how to programmatically add a GestureOverlayView to an Activity:

The following XML snippet shows how to add a GestureOverlayView declaratively:

The GestureOverlayView has several events that will be raised during the process of drawing a gesture. The most interesting event is GesturePerformed . This event is raised when the user has completed drawing their gesture.

When this event is raised, the Activity asks a GestureLibrary to try and match the gesture that the user with one of the gestures created by Gesture Tool. GestureLibrary will return a list of Prediction objects.

Each Prediction object holds a score and name of one of the gestures in the GestureLibrary . The higher the score, the more likely the gesture named in the Prediction matches the gesture drawn by the user. Generally speaking, scores lower than 1.0 are considered poor matches.

The following code shows an example of matching a gesture:

Источник

Walkthrough — Using Touch in Android

Let us see how to use the concepts from the previous section in a working application. We will create an application with four activities. The first activity will be a menu or a switchboard that will launch the other activities to demonstrate the various APIs. The following screenshot shows the main activity:

The first Activity, Touch Sample, will show how to use event handlers for touching the Views. The Gesture Recognizer activity will demonstrate how to subclass Android.View.Views and handle events as well as show how to handle pinch gestures. The third and final activity, Custom Gesture, will show how use custom gestures. To make things easier to follow and absorb, we’ll break this walkthrough up into sections, with each section focusing on one of the Activities.

Touch Sample Activity

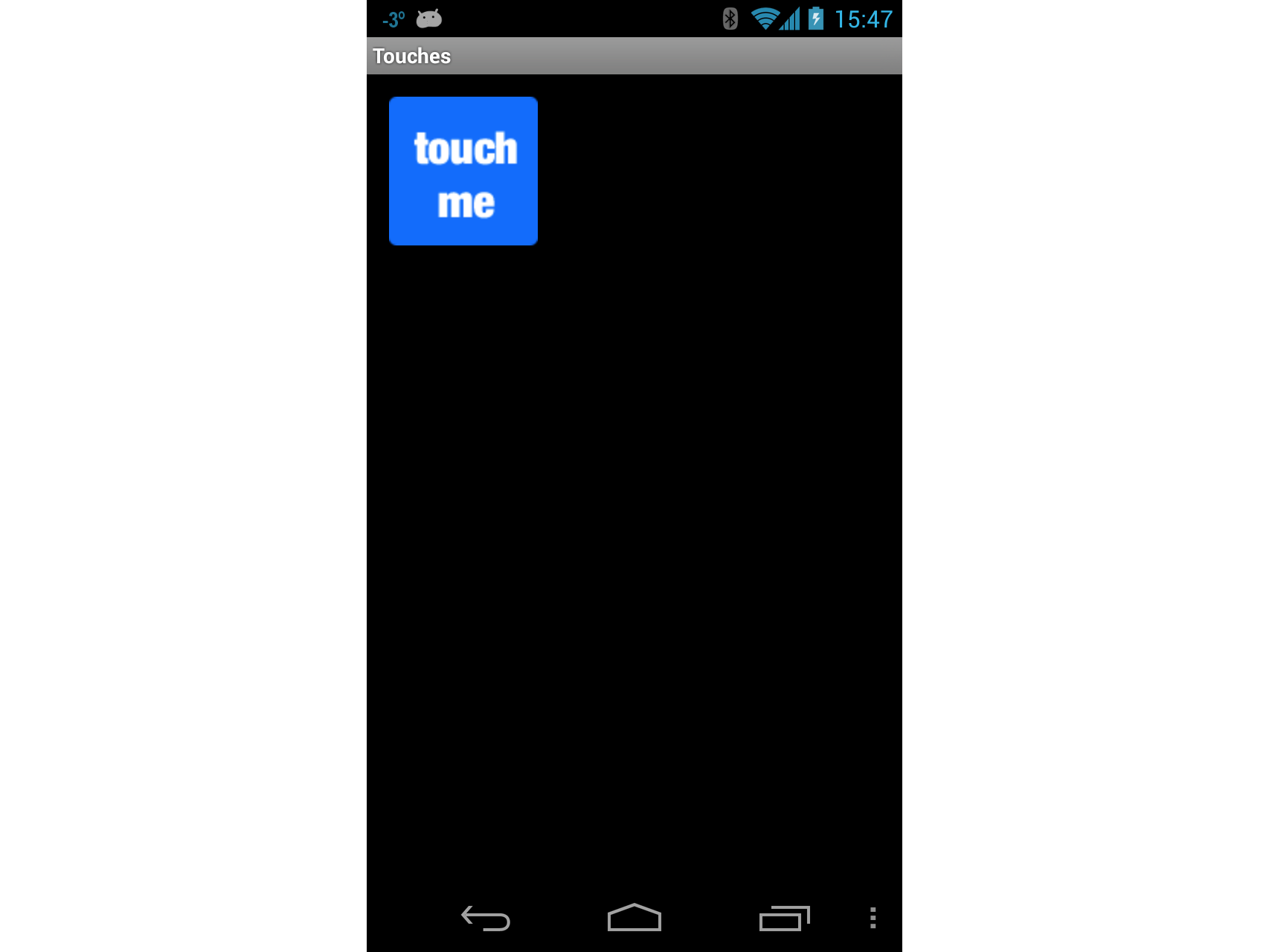

Open the project TouchWalkthrough_Start. The MainActivity is all set to go – it is up to us to implement the touch behaviour in the activity. If you run the application and click Touch Sample, the following activity should start up:

Now that we have confirmed that the Activity starts up, open the file TouchActivity.cs and add a handler for the Touch event of the ImageView :

Next, add the following method to TouchActivity.cs:

Notice in the code above that we treat the Move and Down action as the same. This is because even though the user may not lift their finger off the ImageView , it may move around or the pressure exerted by the user may change. These types of changes will generate a Move action.

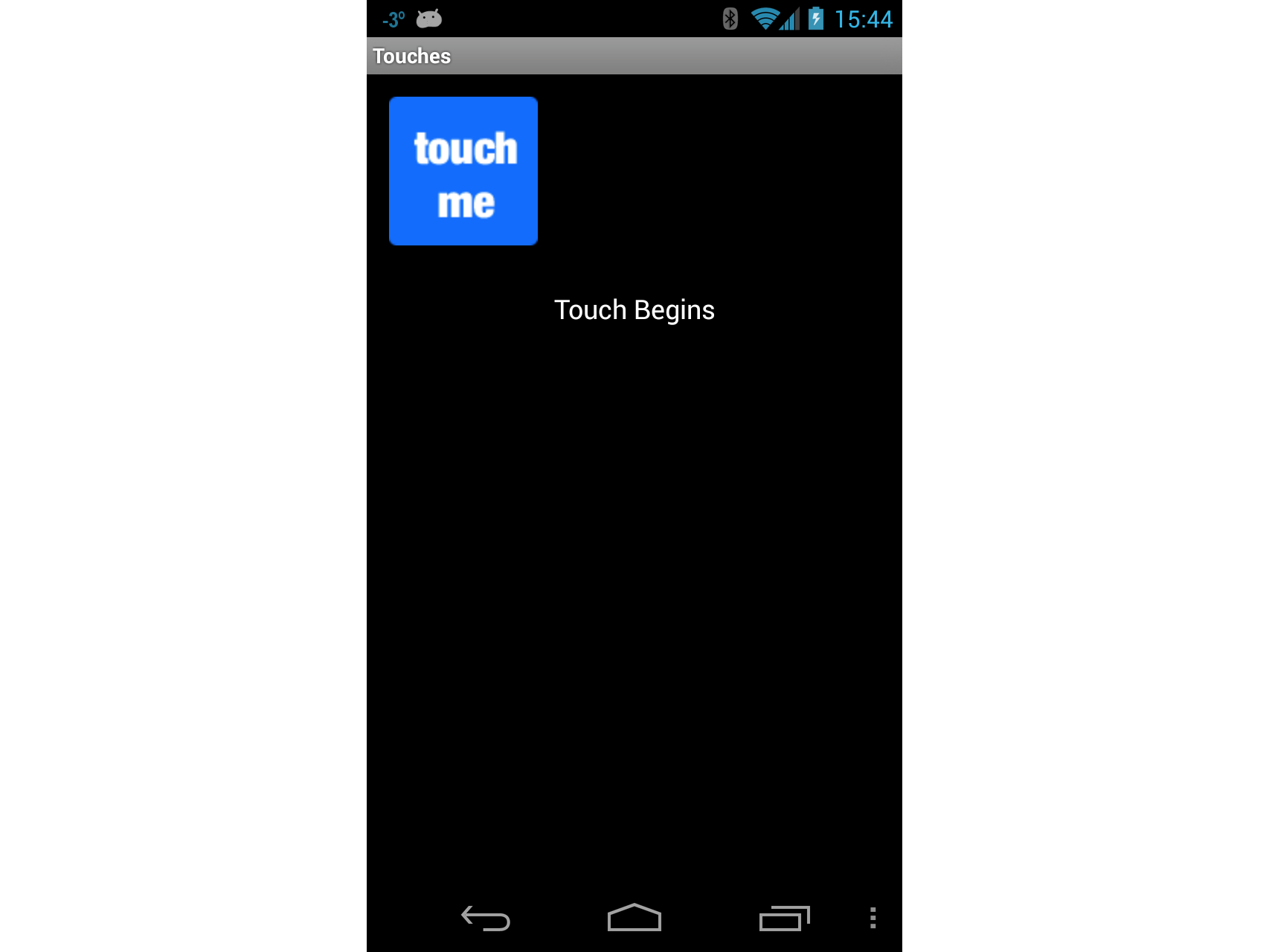

Each time the user touches the ImageView , the Touch event will be raised and our handler will display the message Touch Begins on the screen, as shown in the following screenshot:

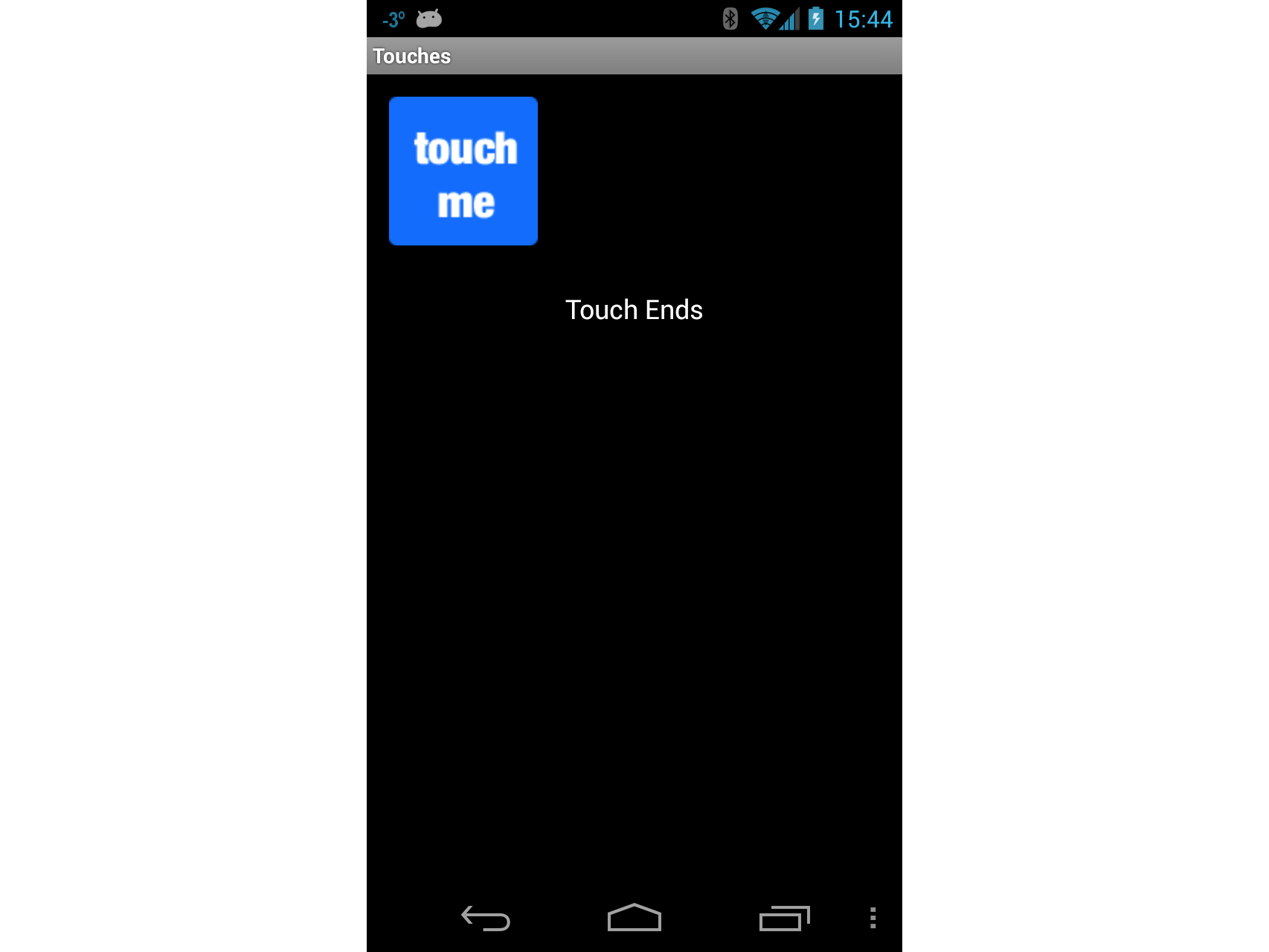

As long as the user is touching the ImageView , Touch Begins will be displayed in the TextView . When the user is no longer touching the ImageView , the message Touch Ends will be displayed in the TextView , as shown in the following screenshot:

Gesture Recognizer Activity

Now lets implement the Gesture Recognizer activity. This activity will demonstrate how to drag a view around the screen and illustrate one way to implement pinch-to-zoom.

Add a new Activity to the application called GestureRecognizer . Edit the code for this activity so that it resembles the following code:

Add a new Android view to the project, and name it GestureRecognizerView . Add the following variables to this class:

Add the following constructor to GestureRecognizerView . This constructor will add an ImageView to our activity. At this point the code still will not compile – we need to create the class MyScaleListener that will help with resizing the ImageView when the user pinches it:

To draw the image on our activity, we need to override the OnDraw method of the View class as shown in the following snippet. This code will move the ImageView to the position specified by _posX and _posY as well as resize the image according to the scaling factor:

Next we need to update the instance variable _scaleFactor as the user pinches the ImageView . We will add a class called MyScaleListener . This class will listen for the scale events that will be raised by Android when the user pinches the ImageView . Add the following inner class to GestureRecognizerView . This class is a ScaleGesture.SimpleOnScaleGestureListener . This class is a convenience class that listeners can subclass when you are interested in a subset of gestures:

The next method we need to override in GestureRecognizerView is OnTouchEvent . The following code lists the full implementation of this method. There is a lot of code here, so lets take a minute and look what is going on here. The first thing this method does is scale the icon if necessary – this is handled by calling _scaleDetector.OnTouchEvent . Next we try to figure out what action called this method:

If the user touched the screen with, we record the X and Y positions and the ID of the first pointer that touched the screen.

If the user moved their touch on the screen, then we figure out how far the user moved the pointer.

If the user has lifted his finger off the screen, then we will stop tracking the gestures.

Now run the application, and start the Gesture Recognizer activity. When it starts the screen should look something like the screenshot below:

Now touch the icon, and drag it around the screen. Try the pinch-to-zoom gesture. At some point your screen may look something like the following screen shot:

At this point you should give yourself a pat on the back: you have just implemented pinch-to-zoom in an Android application! Take a quick break and lets move on to the third and final Activity in this walkthrough – using custom gestures.

Custom Gesture Activity

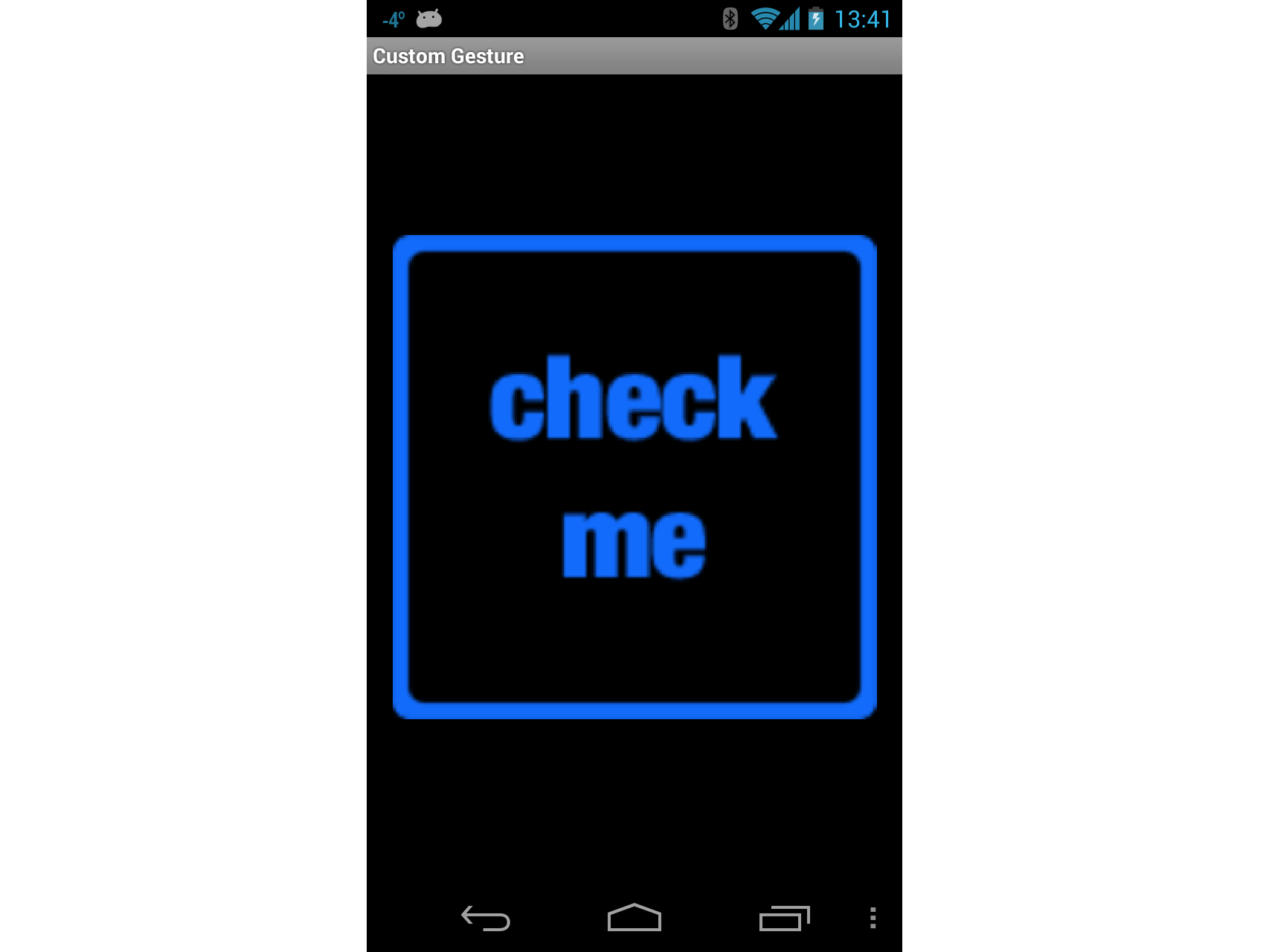

The final screen in this walkthrough will use custom gestures.

For the purposes of this Walkthrough, the gestures library has already been created using Gesture Tool and added to the project in the file Resources/raw/gestures. With this bit of housekeeping out of the way, lets get on with the final Activity in the walkthrough.

Add a layout file named custom_gesture_layout.axml to the project with the following contents. The project already has all the images in the Resources folder:

Next add a new Activity to the project and name it CustomGestureRecognizerActivity.cs . Add two instance variables to the class, as showing in the following two lines of code:

Edit the OnCreate method of the this Activity so that it resembles the following code. Lets take a minute to explain what is going on in this code. The first thing we do is instantiate a GestureOverlayView and set that as the root view of the Activity. We also assign an event handler to the GesturePerformed event of GestureOverlayView . Next we inflate the layout file that was created earlier, and add that as a child view of the GestureOverlayView . The final step is to initialize the variable _gestureLibrary and load the gestures file from the application resources. If the gestures file cannot be loaded for some reason, there is not much this Activity can do, so it is shutdown:

The final thing we need to do implement the method GestureOverlayViewOnGesturePerformed as shown in the following code snippet. When the GestureOverlayView detects a gesture, it calls back to this method. The first thing we try to get an IList

Run the application and start up the Custom Gesture Recognizer activity. It should look something like the following screenshot:

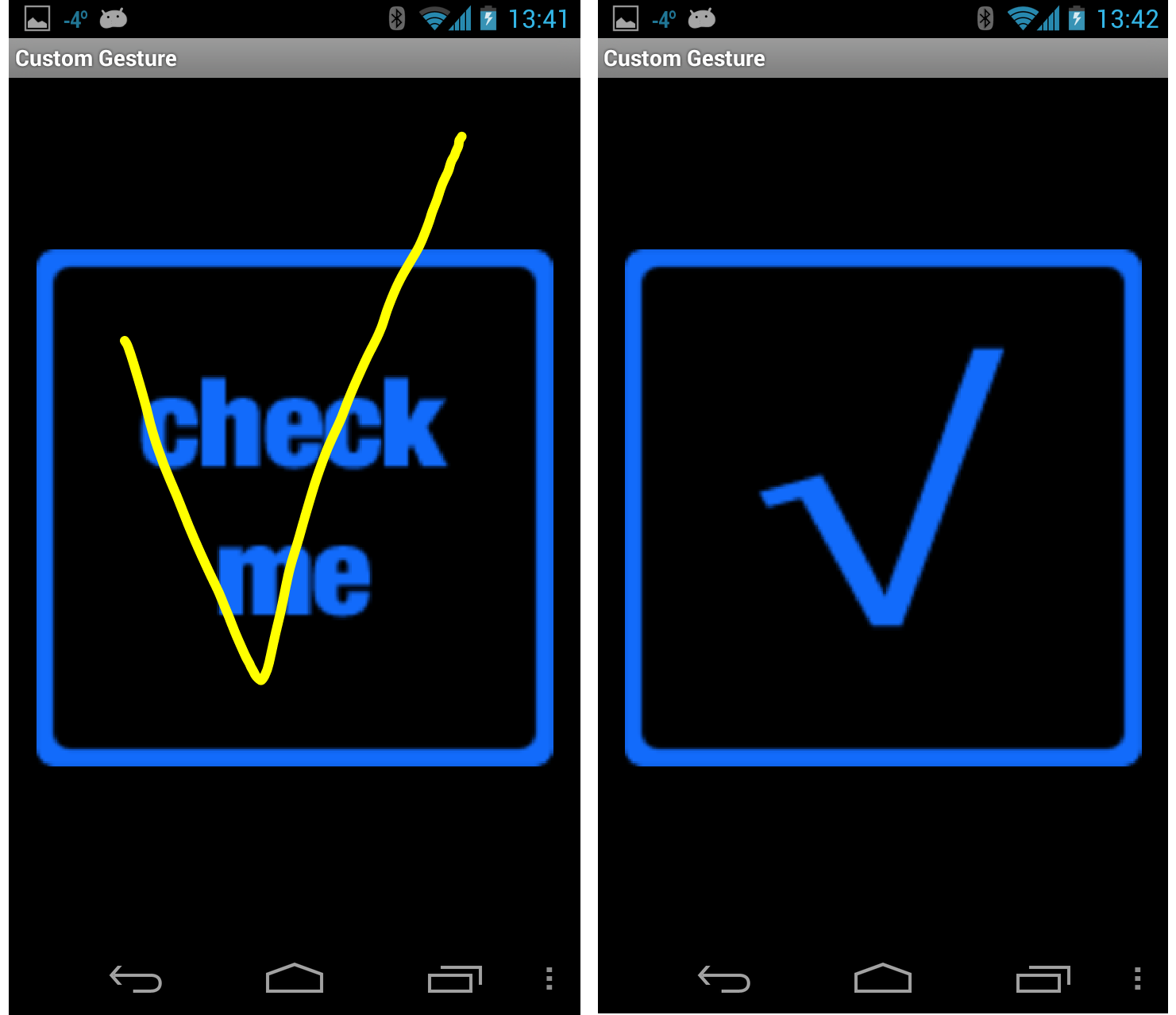

Now draw a checkmark on the screen, and the bitmap being displayed should look something like that shown in the next screenshots:

Finally, draw a scribble on the screen. The checkbox should change back to its original image as shown in these screenshots:

Источник