Gestures and Touch Events

Gesture recognition and touch event handling are an important part of developing user interactions. Handling standard events such as clicks, long clicks, and key presses is basic and covered in other guides. This guide focuses on more specialized gestures such as:

- Swiping in a direction

- Double tapping for zooming

- Pinch to zoom in or out

- Dragging and dropping

- Effects while scrolling a list

You can see a visual guide of common gestures on the gestures design patterns guide. See the new Material Design information about the touch mechanics behind gestures too.

At the heart of all gestures is the OnTouchListener and its onTouch method, which has access to MotionEvent data. Every view can have an OnTouchListener attached with setOnTouchListener.

Each onTouch event has access to a MotionEvent, which describes movements in terms of an action code and a set of axis values. The action code specifies the state change that occurred such as a pointer going down or up. The axis values describe the position and other movement properties:

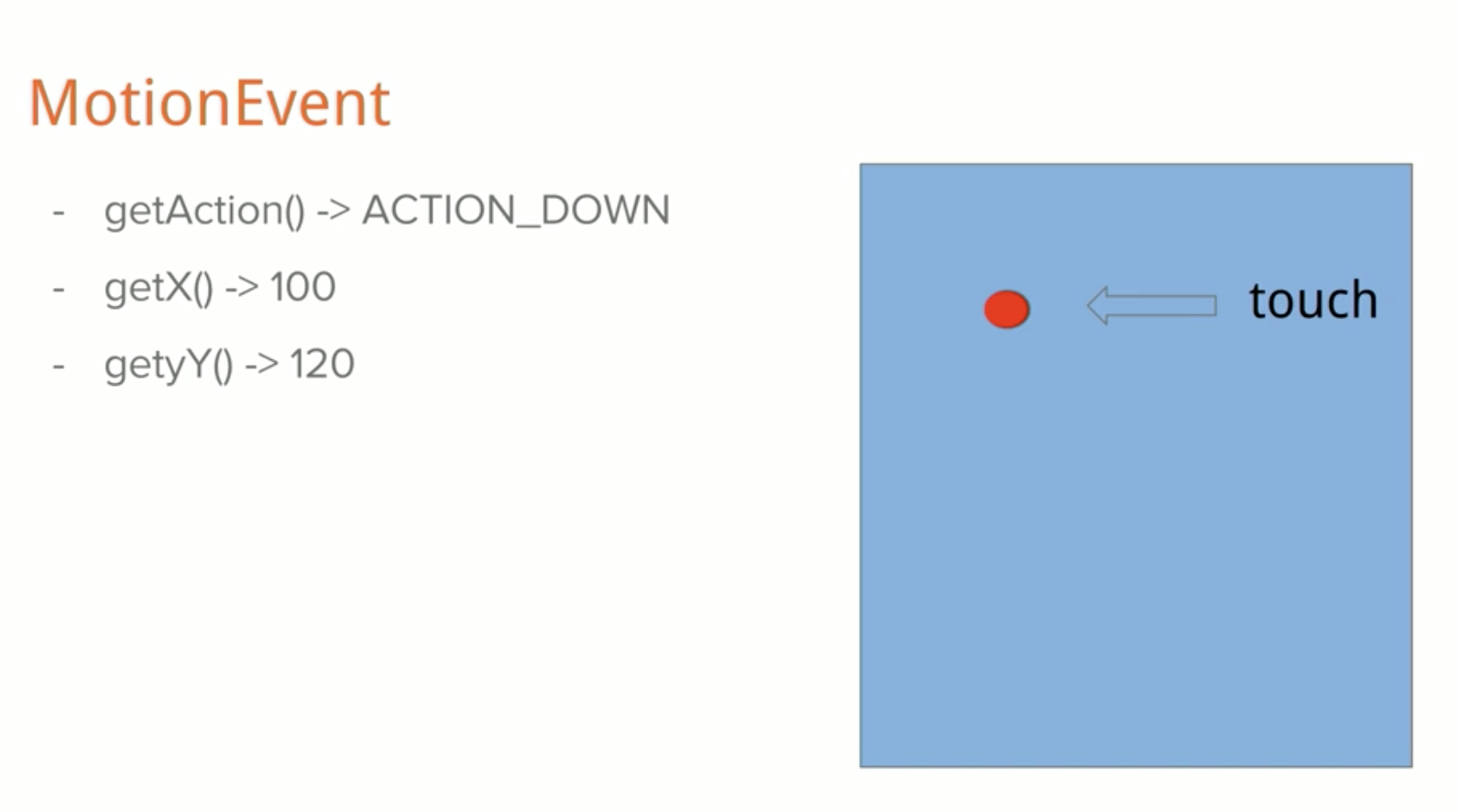

- getAction() — Returns an integer constant such as MotionEvent.ACTION_DOWN , MotionEvent.ACTION_MOVE , and MotionEvent.ACTION_UP

- getX() — Returns the x coordinate of the touch event

- getY() — Returns the y coordinate of the touch event

Note that every touch event can be propagated through the entire affected view hierarchy. Not only can the touched view respond to the event but every layout that contains the view has an opportunity as well. Refer to the understanding touch events section for a detailed overview.

Note that getAction() normally includes information about both the action and the pointer index. In single-touch events, there is only one pointer (index 0), so no bitmask is needed. In multi-touch events (e.g., pinch open or pinch close), however, multiple fingers are involved and a non-zero pointer index may be included in the value returned by getAction(). As a result, other methods should be used to determine the touch event:

- getActionMasked() — extract the action event without the pointer index

- getActionIndex() — extract the pointer index used

The events associated with other pointers usually start with MotionEvent.ACTION_POINTER, such as MotionEvent.ACTION_POINTER_DOWN and MotionEvent.ACTION_POINTER_UP. The getPointerCount() method on the MotionEvent can be used to determine how many pointers are active in this touch sequence.
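As a rough illustration of what getActionMasked() and getActionIndex() extract, the following plain-Java sketch decodes a raw action value by hand. The class name ActionDecoder is hypothetical; the constants mirror the documented values of MotionEvent.ACTION_MASK, MotionEvent.ACTION_POINTER_INDEX_MASK, and MotionEvent.ACTION_POINTER_INDEX_SHIFT. In an app you would simply call the two MotionEvent methods.

```java
// Plain-Java sketch, independent of the Android SDK.
// ActionDecoder is a hypothetical name used only for illustration.
final class ActionDecoder {
    // Values mirror the documented constants in android.view.MotionEvent.
    static final int ACTION_MASK = 0xff;
    static final int ACTION_POINTER_INDEX_MASK = 0xff00;
    static final int ACTION_POINTER_INDEX_SHIFT = 8;
    static final int ACTION_POINTER_DOWN = 5;

    // Equivalent of getActionMasked(): keep only the low byte (the action).
    static int actionMasked(int rawAction) {
        return rawAction & ACTION_MASK;
    }

    // Equivalent of getActionIndex(): keep only the pointer index bits.
    static int actionIndex(int rawAction) {
        return (rawAction & ACTION_POINTER_INDEX_MASK) >> ACTION_POINTER_INDEX_SHIFT;
    }
}
```

For example, a second finger going down produces a raw action that combines pointer index 1 with ACTION_POINTER_DOWN; decoding it yields the action 5 and the index 1.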

Within an onTouch event, we can then use a GestureDetector to understand gestures based on a series of motion events. Gestures are often used for user interactions within an app. Let’s take a look at how to implement common gestures.

For easy gesture detection using a third-party library, check out the popular Sensey library which greatly simplifies the process of attaching multiple gestures to your views.

You can enable double tap events for any view within your activity using the OnDoubleTapListener. First, copy the code for OnDoubleTapListener into your application and then attach the listener to the view.

Now that view will be able to respond to a double tap event and you can handle the event accordingly.
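To make the idea concrete, here is a minimal plain-Java sketch of the timing rule behind double-tap detection: two taps count as a double tap when the second follows the first within a timeout. This is not the code of OnDoubleTapListener or GestureDetector; the class name DoubleTapDetector and the timeout passed in are assumptions, and a real detector would also check that the two taps land close together on screen.

```java
// Plain-Java sketch of double-tap timing; DoubleTapDetector is a hypothetical class.
final class DoubleTapDetector {
    private final long timeoutMs;   // maximum gap between the two taps (assumed value)
    private long lastTapMs = -1;    // time of the pending first tap, or -1 if none

    DoubleTapDetector(long timeoutMs) {
        this.timeoutMs = timeoutMs;
    }

    /** Call once per completed tap; returns true when this tap completes a double tap. */
    boolean onTap(long timeMs) {
        boolean isDouble = lastTapMs >= 0 && timeMs - lastTapMs <= timeoutMs;
        // After a double tap, start over so a third tap does not pair with the second.
        lastTapMs = isDouble ? -1 : timeMs;
        return isDouble;
    }
}
```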

Detecting finger swipes in a particular direction is best done using the built-in onFling event in the GestureDetector.OnGestureListener.
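The decision made inside an onFling handler can be sketched in plain Java as follows. onFling receives the start and end events plus the velocity on each axis; the sketch takes those as plain numbers. The class name SwipeClassifier and both threshold values are assumptions chosen for illustration, not values prescribed by Android.

```java
// Plain-Java sketch of classifying a fling by direction.
// SwipeClassifier and the thresholds below are hypothetical.
final class SwipeClassifier {
    enum Direction { LEFT, RIGHT, UP, DOWN, NONE }

    static final float DISTANCE_THRESHOLD = 100f; // minimum travel in pixels (assumed)
    static final float VELOCITY_THRESHOLD = 100f; // minimum speed in pixels/second (assumed)

    static Direction classify(float startX, float startY, float endX, float endY,
                              float velocityX, float velocityY) {
        float dx = endX - startX;
        float dy = endY - startY;
        if (Math.abs(dx) > Math.abs(dy)) {
            // Mostly horizontal movement.
            if (Math.abs(dx) > DISTANCE_THRESHOLD && Math.abs(velocityX) > VELOCITY_THRESHOLD) {
                return dx > 0 ? Direction.RIGHT : Direction.LEFT;
            }
        } else if (Math.abs(dy) > DISTANCE_THRESHOLD && Math.abs(velocityY) > VELOCITY_THRESHOLD) {
            // Mostly vertical movement; y grows downward on screen.
            return dy > 0 ? Direction.DOWN : Direction.UP;
        }
        return Direction.NONE;
    }
}
```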

A helper class that makes handling swipes as easy as possible can be found in the OnSwipeTouchListener class. Copy the OnSwipeTouchListener class to your own application and then attach the listener to a view to manage the swipe events.

With that code in place, swipe gestures should be easily manageable.

If you intend to implement pull-to-refresh capabilities in your RecyclerView, you can leverage the built-in SwipeRefreshLayout as described here. If you wish to handle your own swipe detection, you can use the new OnFlingListener as described in this section.

If you are interested in having a ListView that recognizes swipe gestures for each item, consider using the popular third-party library android-swipelistview, which is a ListView replacement that supports swipeable items. Once set up, you can configure a layout that will appear when the item is swiped.

Check out the swipelistview project for more details. In general, you declare the swipeable list in your layout and then define the individual list item layout with a front view and a back view.

The front view is displayed by default, and if the user swipes left on an item, the back view is displayed for that item. This simplifies swipes for the common case of menus for a ListView.

A more recent alternative is the AndroidSwipeLayout library, which can be more flexible and is worth checking out.

Supporting pinch to zoom in and out is fairly straightforward thanks to the ScaleGestureDetector class. The easiest way to manage pinch events is to subclass a view and manage the pinch event from within.

Using the ScaleGestureDetector makes managing this fairly straightforward.
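As a sketch of the bookkeeping such a view typically does, the plain-Java class below accumulates the per-event scale factor and clamps the result. ScaleGestureDetector.getScaleFactor() reports the ratio of the current finger span to the previous one, so the running scale is the product of those ratios. The class name PinchZoomState and the clamp limits are assumptions; in a real view you would apply the resulting scale when drawing and then invalidate the view.

```java
// Plain-Java sketch of pinch-zoom state; PinchZoomState and its limits are hypothetical.
final class PinchZoomState {
    static final float MIN_SCALE = 0.1f; // assumed lower bound
    static final float MAX_SCALE = 5.0f; // assumed upper bound

    private float scaleFactor = 1.0f;

    /**
     * Call with the value a scale listener would read from getScaleFactor().
     * Returns the clamped cumulative scale to apply when drawing.
     */
    float onScale(float detectorScaleFactor) {
        scaleFactor *= detectorScaleFactor;
        // Keep the view from becoming too small or too large.
        scaleFactor = Math.max(MIN_SCALE, Math.min(scaleFactor, MAX_SCALE));
        return scaleFactor;
    }
}
```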

One of the most common use cases for a pinch or pannable view is an ImageView that displays a photo which can be zoomed or panned around on screen, similar to the Facebook client. To achieve the zooming image view, rather than developing this yourself, be sure to check out the PhotoView third-party library. Using PhotoView requires declaring it in the layout XML and then working with it in Java.

Check out the PhotoView readme and sample for more details. You can also check the TouchImageView library which is a nice alternative.

Scrolling is a common gesture associated with lists of items within a ListView or RecyclerView. Often the scrolling is associated with the hiding of certain elements (toolbar) or the shrinking or morphing of elements such as a parallax header. If you are using a RecyclerView, check out the addOnScrollListener. With a ListView, we can use the setOnScrollListener instead.

With Android "M" and the release of the Design Support Library, the CoordinatorLayout was introduced, which enables handling changes associated with the scrolling of a RecyclerView. Review the Handling Scrolls with CoordinatorLayout guide for a detailed breakdown of how to manage scrolls using this new layout to collapse the toolbar or hide and reveal header content.

Dragging and dropping views is not particularly difficult to do thanks to the OnDragListener built in since API 11. Unfortunately, to support Gingerbread, managing drag and drop becomes much more manual as you have to implement it using the onTouch handlers. With API 11 and above, you can leverage the built-in drag handling.

First, we want to attach an onTouch handler on the views that are draggable which will start the drag by creating a DragShadow with the DragShadowBuilder which is then dragged around the Activity once startDrag is invoked on the view.

If we want to add "drag" or "drop" events, we should create a DragListener that is attached to a drop zone for the draggable object. We hook up the listener and manage the different dragging and dropping events for the zone.

Check out the vogella dragging tutorial or the javapapers dragging tutorial for a detailed look at handling dragging and dropping. Read the official drag and drop guide for a more detailed overview.

Detecting when the device is shaken requires using the sensor data to determine movement. We can whip up a special listener which manages this shake recognition for us. First, copy the ShakeListener into your project. Now, we can implement ShakeListener.Callback in any activity.

Now we just have to implement the expected behavior for the shaking event in the two methods from the callback.
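For intuition about how sensor data can reveal a shake, here is one common approach sketched in plain Java: convert the accelerometer reading into a g-force and compare it with a threshold. This is not the ShakeListener's actual implementation, which is not shown in this guide. The class name ShakeMath and the threshold are assumptions; the gravity constant has the same value as SensorManager.GRAVITY_EARTH.

```java
// Plain-Java sketch of a g-force shake test; ShakeMath and the threshold are hypothetical.
final class ShakeMath {
    static final float GRAVITY_EARTH = 9.80665f;  // m/s^2, same value as SensorManager.GRAVITY_EARTH
    static final float SHAKE_THRESHOLD_G = 2.7f;  // assumed sensitivity; tune for your app

    /** Magnitude of the acceleration vector, expressed in multiples of gravity. */
    static double gForce(float x, float y, float z) {
        double magnitude = Math.sqrt((double) x * x + (double) y * y + (double) z * z);
        return magnitude / GRAVITY_EARTH;
    }

    /** A device at rest reads about 1 g; a vigorous shake reads well above that. */
    static boolean isShake(float x, float y, float z) {
        return gForce(x, y, z) > SHAKE_THRESHOLD_G;
    }
}
```

A production listener would usually also debounce, for example by ignoring further shakes for a short interval after one is detected.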

For additional multi-touch events such as "rotation" of fingers, finger movement events, etc., be sure to check out libraries such as Sensey and the multitouch-gesture-detectors third-party library. Read the documentation for more details about how to handle multi-touch gestures. Also, for a more generic approach, read the official multitouch guide. See this blog post for more details about how multi-touch events work.

This section briefly summarizes touch propagation within the view hierarchy. There are three distinct touch related methods which will be outlined below:

| Order | Method | Invoked On | Description |
|---|---|---|---|
| 1st | dispatchTouchEvent | A, VG, V | Dispatch touch events to affected child views |
| 2nd | onInterceptTouchEvent | VG | Intercept touch events before passing to children |
| 3rd | onTouchEvent | VG, V | Handle the touch event and stop propagation |

"Order" above defines which of these methods gets invoked first when a touch is initiated. Note that in "Invoked On", A represents Activity, VG is ViewGroup, and V is View, describing where the method is invoked during a touch event.

Keep in mind that a gesture is simply a series of touch events as follows:

- DOWN. Begins with a single DOWN event when the user touches the screen

- MOVE. Zero or more MOVE events when the user moves the finger around

- UP. Ends with a single UP (or CANCEL) event when the user releases the screen

These touch events trigger a very particular set of method invocations on affected views. To further illustrate, assume a "View C" is contained within a "ViewGroup B" which is then contained within "ViewGroup A".

Review this example carefully as all sections below will be referring to the example presented here.

When a touch DOWN event occurs on "View C" and the view has registered a touch listener, the following series of actions happens as the onTouchEvent is triggered on the view:

- The DOWN touch event is passed to "View C" onTouchEvent and the boolean result of TRUE or FALSE determines if the action is captured.

- Returning TRUE: If the "View C" onTouchEvent returns true then this view captures the gesture

    - Because "View C" returns true and is handling the gesture, the event is not passed to "ViewGroup B"’s nor "ViewGroup A"’s onTouchEvent methods.

    - Because "View C" says it’s handling the gesture, any additional events in this gesture will also be passed to "View C"’s onTouchEvent method until the gesture ends with an UP touch event.

- Returning FALSE: If the "View C" onTouchEvent returns false then the gesture is propagated upwards

    - The DOWN event is passed upward to "ViewGroup B" onTouchEvent method, and the boolean result determines if the event continues to propagate.

    - If "ViewGroup B" doesn’t return true then the event is passed upward to "ViewGroup A" onTouchEvent
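The capture rule above can be modeled in a few lines of plain Java. This is a simplified simulation, not Android code: the class GestureCapture is hypothetical, views are represented by name only, and each view's answer to the DOWN event is supplied up front. It shows that the first view (innermost first) to return true for DOWN receives the remaining events of the gesture.

```java
// Plain-Java model of onTouchEvent capture; GestureCapture is a hypothetical class.
final class GestureCapture {
    private final String[] chain;        // view names, innermost first
    private final boolean[] handlesDown; // what each view's onTouchEvent returns for DOWN
    private String target;               // view that captured the current gesture, if any

    GestureCapture(String[] chain, boolean[] handlesDown) {
        this.chain = chain;
        this.handlesDown = handlesDown;
    }

    /** Returns the name of the view that handles the event, or null if none does. */
    String deliver(String action) {
        if (action.equals("DOWN")) {
            // Bubble the DOWN event upward until some onTouchEvent returns true.
            target = null;
            for (int i = 0; i < chain.length; i++) {
                if (handlesDown[i]) {
                    target = chain[i];
                    break;
                }
            }
        }
        String receiver = target;
        if (action.equals("UP") || action.equals("CANCEL")) {
            target = null; // the gesture is over
        }
        return receiver;
    }
}
```

With "View C" returning false and "ViewGroup B" returning true, DOWN, MOVE, and UP are all reported as handled by "ViewGroup B".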

In addition to the onTouchEvent, there is also a separate onInterceptTouchEvent that exists only on ViewGroups such as layouts. Before the onTouchEvent is called on any View, all its ancestors are first given the chance to intercept this event. In other words, a containing layout can choose to steal the event from a touched view before the view even receives the event. With this added in, the series of events from above becomes:

- The DOWN event on "View C" is first passed to "ViewGroup A" onInterceptTouchEvent, which can return false or true depending on if it wants to intercept the touch.

- If false, the event is then passed to "ViewGroup B" onInterceptTouchEvent, which can also return false or true depending on if it wants to intercept the touch.

- Next the DOWN event is passed to "View C" onTouchEvent which can return true to handle the event.

- Additional touch events within the same gesture are still passed to A and B’s onInterceptTouchEvent before being called on "View C" onTouchEvent even if the ancestors chose not to previously intercept.

The takeaway here is that any ViewGroup can choose to implement onInterceptTouchEvent and effectively decide to steal touch events from any of the child views. If the children choose not to respond to the touch once received, then the touch event is propagated back upwards through the onTouchEvent of each of the containing ViewGroups as described in the previous section.

As the table above shows, all of this touch and interception behavior is managed by "dispatchers" via the dispatchTouchEvent method invoked on each view. To reveal this behavior: as soon as a touch event occurs on top of "View C", the following dispatching occurs:

- The Activity.dispatchTouchEvent() is called for the current activity containing the view.

- If the activity chooses not to "consume" the event (and stop propagation), the event is passed to the "ViewGroup A" dispatchTouchEvent since A is the outermost ViewGroup affected by the touch.

- "ViewGroup A" dispatchTouchEvent will trigger the "ViewGroup A" onInterceptTouchEvent first and if that method chooses not to intercept, the touch event is then sent to the "ViewGroup B" dispatchTouchEvent.

- In turn, the "ViewGroup B" dispatchTouchEvent will trigger the "ViewGroup B" onInterceptTouchEvent and if that method chooses not to intercept, the touch event is then sent to the "View C" dispatchTouchEvent.

- "View C" dispatchTouchEvent then invokes the "View C" onTouchEvent.

To recap, the dispatchTouchEvent is called at every level of the way starting with the Activity. The dispatcher is responsible for identifying which methods to invoke next. On a ViewGroup, the dispatcher triggers the onInterceptTouchEvent before triggering the dispatchTouchEvent on the next child in the view hierarchy.
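The call order for a single DOWN event can be traced with a small plain-Java model. It is a deliberate simplification and not the framework's implementation: DispatchModel and Node are hypothetical names, each node has at most one child, the Activity level is left out, and every node's answers (whether it intercepts, whether its onTouchEvent returns true) are fixed in advance.

```java
import java.util.List;

// Plain-Java model of DOWN-event dispatch; DispatchModel and Node are hypothetical.
final class DispatchModel {
    static final class Node {
        final String name;
        final boolean intercepts; // result of onInterceptTouchEvent (ViewGroups only)
        final boolean handles;    // result of onTouchEvent
        final Node child;         // null models a plain View with no children

        Node(String name, boolean intercepts, boolean handles, Node child) {
            this.name = name;
            this.intercepts = intercepts;
            this.handles = handles;
            this.child = child;
        }
    }

    /** Appends each method invocation to the log; returns true if the event was handled. */
    static boolean dispatch(Node node, List<String> log) {
        log.add(node.name + ".dispatchTouchEvent");
        if (node.child != null) {
            // A ViewGroup gets the chance to intercept before its child sees the event.
            log.add(node.name + ".onInterceptTouchEvent");
            if (!node.intercepts && dispatch(node.child, log)) {
                return true; // a descendant handled the event
            }
        }
        // Reached when this is a leaf, when it intercepted, or when no descendant handled it.
        log.add(node.name + ".onTouchEvent");
        return node.handles;
    }
}
```

Building the chain A, B, C with only C handling the event logs the dispatch and intercept calls on A and B, followed by C's dispatchTouchEvent and onTouchEvent. If C declines and B handles, the log additionally shows the event bubbling back up to B's onTouchEvent.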

The explanation above has been simplified and abridged for clarity. For additional reading on the touch propagation system, please review this detailed article as well as this doc on ViewGroup touch handling and this useful blog post.


Android which view is touched

About the content

This content has been published here with the express permission of the author.

Caren Chang explains how the setOnClickListener() and setOnLongClickListener() methods work, and examines how the view hierarchy is laid out in Android!

Introduction

My motivations for exploring Views in depth started when I worked at June, which made a smart phone app for a smart oven. Writing UI for a smart oven is different from writing UI for the phone because the experience should feel more like an oven as opposed to a phone.

Other apps, such as Periscope, utilize custom touches to create a better user experience. Understanding how Android handles Views and custom gestures will help you implement these features.

How Android Lays Out Views

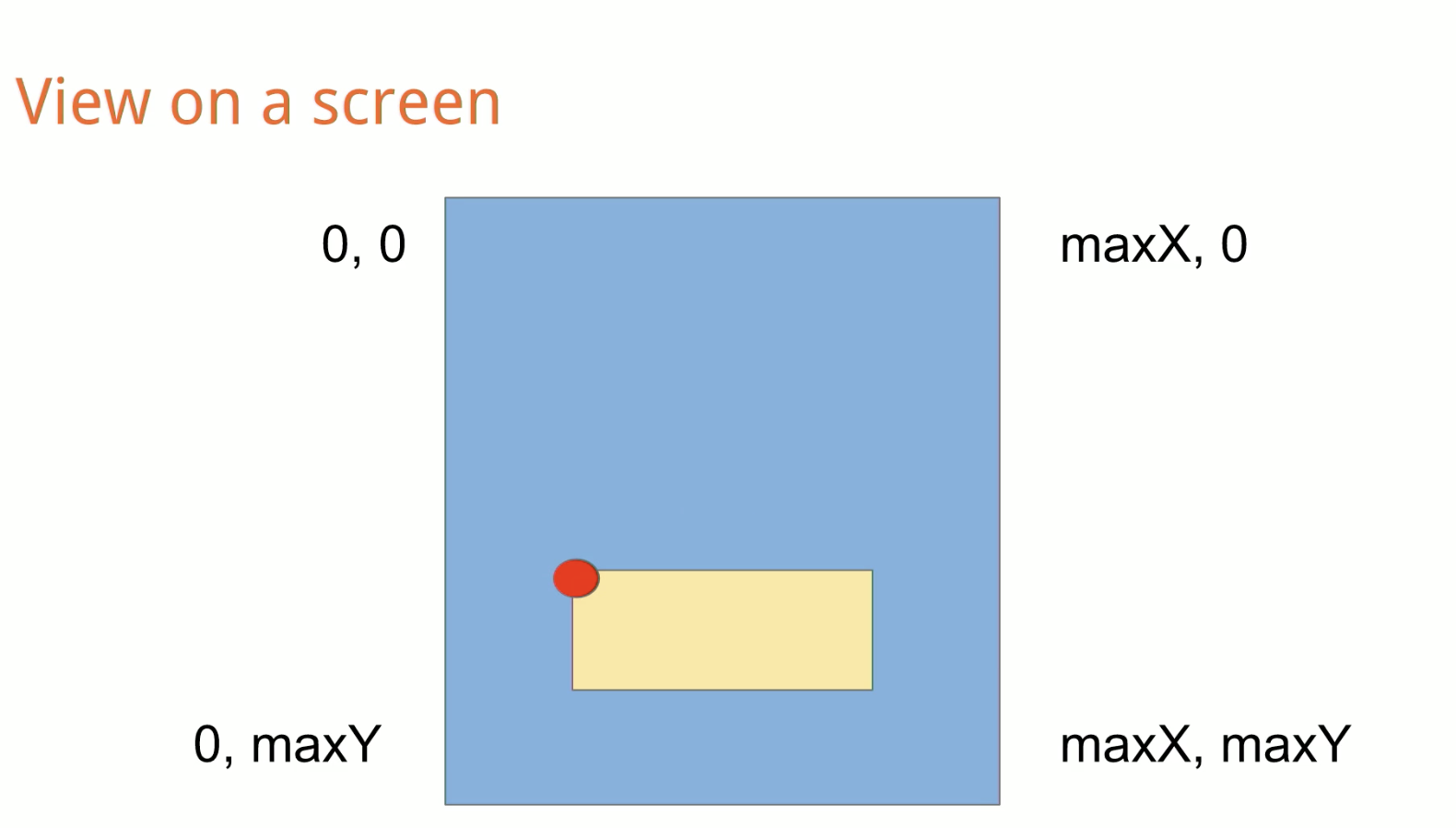

Views drawn on Android do not follow a traditional X, Y coordinate system. A view knows where it is relative to the screen, and where the touches should be sent based on the top left corner, along with the width and height.

When a User Touches a View

Every touch on the screen gets translated into a MotionEvent. The MotionEvent encapsulates an action code and axis values. The action code describes whether the user put a finger down, lifted it, or moved it around the screen. Axis values specify where exactly the MotionEvent happened, that is, where exactly the user touched the screen.

MotionEvent

In our example, the blue area is the screen and the red dot is where the user touched. That touch is encapsulated as a MotionEvent.


When a touch occurs, Android sends it to the appropriate Views that it thinks should handle the touch. Then Views themselves can decide if they want to handle this MotionEvent or pass it along to another view.

boolean onTouchEvent()

An important method to note is onTouchEvent. This method is called whenever a touch needs to be handled, and it returns true if the view that calls onTouchEvent handles the touch.

Let’s create a custom view that handles these touch events.

When we want to handle different MotionEvents, it’s important to distinguish them. Here, we use a switch statement.
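A minimal sketch of such a switch, written as plain Java so it runs without the Android SDK. The class name ActionNames is hypothetical; the four constants mirror the documented values of MotionEvent.ACTION_DOWN, ACTION_UP, ACTION_MOVE, and ACTION_CANCEL. Inside a real onTouchEvent you would switch on event.getActionMasked() and use the MotionEvent constants directly.

```java
// Plain-Java sketch of distinguishing MotionEvent actions; ActionNames is hypothetical.
final class ActionNames {
    // Values mirror the documented constants in android.view.MotionEvent.
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_CANCEL = 3;

    static String describe(int actionMasked) {
        switch (actionMasked) {
            case ACTION_DOWN:
                return "finger touched the screen";
            case ACTION_MOVE:
                return "finger moved on the screen";
            case ACTION_UP:
                return "finger lifted off the screen";
            case ACTION_CANCEL:
                return "gesture was cancelled";
            default:
                return "other action";
        }
    }
}
```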

Creating a Custom ‘onClick’ Listener

A click spans from the moment a user’s finger touches the screen to the moment they lift it.

A click lasts a certain amount of time before the finger lifts off the screen, and a long click lasts a certain amount of time longer than that.

We want to measure how long it takes before a user lifts their finger. Based on this, we can decide whether to fire an onClick event or a long-click event.
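The timing decision can be sketched in plain Java as below. The class name ClickClassifier and the 500 ms threshold are assumptions for illustration; on a device you would read the platform's value from ViewConfiguration.getLongPressTimeout() and record the timestamps on ACTION_DOWN and ACTION_UP.

```java
// Plain-Java sketch of click vs. long click; ClickClassifier and the threshold are hypothetical.
final class ClickClassifier {
    enum Kind { CLICK, LONG_CLICK }

    static final long LONG_CLICK_THRESHOLD_MS = 500; // assumed value

    /** Classifies a press by how long the finger stayed down. */
    static Kind classify(long downTimeMs, long upTimeMs) {
        long heldMs = upTimeMs - downTimeMs;
        return heldMs >= LONG_CLICK_THRESHOLD_MS ? Kind.LONG_CLICK : Kind.CLICK;
    }
}
```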

The View now has to tell Android that it is interested in these events. You could return true from onTouchEvent, but this is not ideal: when you return true on a touch event, all the other views that are laid on top of this view are not going to get any touch events because they are all being intercepted.

Instead, you want to return whether this touch is in the view itself.

Here, the helper method getLocationOnScreen gives the location of the top left corner of the view. Based on that, we can determine whether the MotionEvent was inside the view we are interested in. But this is not ideal either.

Instead, you want to use getRawX and getRawY rather than getX or getY. The reason is that when MotionEvents are passed down from view to view, the events get translated each time: getX and getY are relative to the view receiving the event, while getRawX and getRawY are screen coordinates.
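The bounds check itself is simple arithmetic, sketched here in plain Java. The class name HitTest is hypothetical. In an app, viewLeft and viewTop would come from the two-element array filled by getLocationOnScreen, the size from getWidth() and getHeight(), and the touch point from getRawX() and getRawY().

```java
// Plain-Java sketch of a screen-space hit test; HitTest is a hypothetical class.
final class HitTest {
    /**
     * Returns true when the raw (screen) touch point lies inside the view's rectangle,
     * given the view's top left corner on screen and its size in pixels.
     */
    static boolean isInside(float rawX, float rawY,
                            int viewLeft, int viewTop, int width, int height) {
        return rawX >= viewLeft && rawX < viewLeft + width
            && rawY >= viewTop && rawY < viewTop + height;
    }
}
```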

Swipe Down to Dismiss

Many apps use the swipe down to dismiss. To implement this, first, you want to detect where the finger started on the screen. We capture the initial Y coordinate so that we can know if the user moved down, to the side, or up.

If the user lifts their finger while it is still in the view, we then calculate the difference between the initial Y and ending Y, and if it’s greater than a specific range, we start the animation that moves the view down.
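A minimal plain-Java sketch of that bookkeeping follows. The class name SwipeDownTracker and the dismiss distance are assumptions; the sketch only decides whether to dismiss and leaves the animation itself to the view. It relies on the fact that Y coordinates grow downward on an Android screen.

```java
// Plain-Java sketch of swipe-down-to-dismiss tracking; SwipeDownTracker is hypothetical.
final class SwipeDownTracker {
    private final float dismissDistancePx; // how far down the finger must travel (assumed)
    private float initialY;

    SwipeDownTracker(float dismissDistancePx) {
        this.dismissDistancePx = dismissDistancePx;
    }

    /** Call on ACTION_DOWN with the touch's Y coordinate. */
    void onDown(float y) {
        initialY = y;
    }

    /** Call on ACTION_UP; true means the finger moved far enough downward to dismiss. */
    boolean shouldDismissOnUp(float y) {
        return y - initialY > dismissDistancePx;
    }
}
```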

boolean dispatchTouchEvent()

Another method to consider when working with touches and views is dispatchTouchEvent. It passes a MotionEvent to the target view and returns true if the event is handled by the current view and false otherwise. It can also be used to listen to events, spy on them, and react accordingly.

For every MotionEvent, dispatchTouchEvent is called from the top of the tree to the bottom of the tree, which means every single view will call dispatchTouchEvent if nothing else is intercepted.

I created a GitHub project where I logged everything to see what would happen if I did a press. I changed which methods returned true, e.g. onTouchEvent, to see how that affected the other views, and then did the opposite to see the other result. I think the only way to understand how these views and touches work is by playing with it yourself.

Things to Consider When Customizing Behavior

Custom touch handling is very complicated and prone to bugs. Android gives us GestureDetector, which handles most of the common gestures that we would care about, e.g. tap, double tap, or scroll.

If you do want to customize behavior, the ViewConfiguration class has a lot of the standard constants that Android uses.

You should consider multitouch. Everything we have talked about so far assumes just one finger on the screen.

In Summary

Custom touch events are very complicated. But, on the other hand, all touch events on Android are packaged into a MotionEvent, which is passed down the view hierarchy until an interested view wants to handle it. As such, events can be intercepted and redirected based on what you think should happen.



Caren Chang

Caren is an Android developer working at June, where they currently build an intelligent oven that will help everybody become great cooks. You can find her on Twitter at @calren24.
