- Face detection and tracking on Android using ML Kit — Part 1

- ML Kit

- Machine Learning for Face Detection in Android

- What is Firebase ML Kit?

- What will we be creating?

- Creating a project on Firebase

- Adding an app

- Configuring AndroidManifest.xml

- Adding a camera to our application

- Configuring the camera

- Capturing an Image

- Starting Detection

- Creating the bounding box

- Conclusion

- Recognize text and facial features with ML Kit: Android

- 1. Introduction

- How does it work?

- What you will build

- What you’ll learn

- What you’ll need

- 2. Getting set up

- Download the Code

- 3. Check the dependencies for ML Kit

- Verify the dependencies for ML Kit

- 4. Run the starter app

- 5. Add on-device text recognition

- Set up and run on-device text recognition on an image

- Process the text recognition response

- Run the app on the emulator

- 6. Add on-device face contour detection

- Set up and run on-device face contour detection on an image

- Process the face contour detection response

- Run the app on the emulator

- 7. Congratulations!

- What we’ve covered

- Next Steps

- Learn More

Face detection and tracking on Android using ML Kit — Part 1

Jan 30, 2019

At Onfido, we’re creating an open world where identity is the key to access.

Since our goal is to prevent fraud while maintaining a great user experience for legitimate people, we launched advanced facial verification. With this new approach, we ask people to record live videos instead of static selfies. Users record themselves moving their head and talking out loud to prove they are real people.

Our clients adopted this new feature. But after a few weeks, we noticed that the videos were hard to process, making identity verification more difficult. Users were not performing the right actions. They would retry multiple times and many eventually gave up. This meant that legitimate users were falling through the cracks.

We needed to find a solution, so over 5 days, we talked to machine learning engineers, biometric specialists, mobile developers, product managers and UX designers. Our goal was to radically improve our selfie video verification.

This series of blog posts will document our process to improve the selfie video feature. You can try it now on our demo app.

To improve our selfie video feature, we decided to tell users whether they were performing the actions correctly. This leads to less uncertainty for users and better-quality videos.

To start the video recording, we need to make sure the user’s face is inside the screen, so we can be confident that:

a) There is one face in the image

b) This face is completely contained inside the video we are recording, and not cropped

We call this set of guarantees face detection. Once those two conditions are verified, the video recording automatically starts.
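As a rough illustration of the two conditions above, here is a minimal Java sketch. It is not Onfido’s implementation: the `Box` and `FaceFrameCheck` names are hypothetical, and the boxes stand in for the face bounding boxes a detector reports, assumed to be in the pixel coordinates of the recorded frame.

```java
import java.util.List;

/** Axis-aligned rectangle in frame pixel coordinates (hypothetical helper type). */
final class Box {
    final int left, top, right, bottom;

    Box(int left, int top, int right, int bottom) {
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
    }
}

final class FaceFrameCheck {
    private FaceFrameCheck() {}

    /** True when the box lies entirely inside a frame of the given size. */
    static boolean isInsideFrame(Box face, int frameWidth, int frameHeight) {
        return face.left >= 0 && face.top >= 0
                && face.right <= frameWidth && face.bottom <= frameHeight;
    }

    /** Condition (a): exactly one face. Condition (b): that face is not cropped. */
    static boolean canStartRecording(List<Box> faces, int frameWidth, int frameHeight) {
        return faces.size() == 1
                && isInsideFrame(faces.get(0), frameWidth, frameHeight);
    }
}
```

With a 480x640 frame, a single box spanning (100, 120) to (380, 520) passes the check, while an empty list, two boxes, or a box with a negative left edge does not.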

Next, during the head turn step, we also want to make sure that users make the right movement. In this case there are 2 movements: they must turn their head to the left or right, and then come back to face the camera. We call this face tracking.

ML Kit

To detect and track a face in a picture, we used Firebase ML Kit, a tool that tries to simplify bringing machine learning algorithms to mobile devices. When it comes to face detection and tracking, ML Kit gives us the ability to provide pictures to a FirebaseVisionFaceDetector and then receive callbacks with information about the faces in that picture. I won’t get into many details about the tool itself, since Joe Birch already published a great blog post on that.

In our case, we were interested in 2 features that ML Kit offers:

- Face bounding box in a picture, for detection purposes

- Face Euler Y angle, for head turn tracking
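The head turn check can be pictured as a small state machine driven by the Euler Y angle. The following Java sketch is an assumption about how such tracking might be structured, not the wrapper described in this post: the `HeadTurnTracker` class and both threshold parameters are hypothetical, and it looks only at the magnitude of the angle so it does not depend on which sign means left or right.

```java
/** Tracks "turn the head to one side, then face the camera again". */
final class HeadTurnTracker {
    enum State { WAITING_FOR_TURN, WAITING_FOR_RETURN, DONE }

    // Assumed tuning values: |Y| at or above turnThresholdDeg counts as a turn,
    // |Y| at or below frontThresholdDeg counts as facing the camera.
    private final float turnThresholdDeg;
    private final float frontThresholdDeg;
    private State state = State.WAITING_FOR_TURN;

    HeadTurnTracker(float turnThresholdDeg, float frontThresholdDeg) {
        if (frontThresholdDeg >= turnThresholdDeg) {
            throw new IllegalArgumentException("front threshold must be below turn threshold");
        }
        this.turnThresholdDeg = turnThresholdDeg;
        this.frontThresholdDeg = frontThresholdDeg;
    }

    /** Feed the Euler Y angle (in degrees) of each processed frame; returns the new state. */
    State onAngle(float eulerYDeg) {
        float magnitude = Math.abs(eulerYDeg);
        switch (state) {
            case WAITING_FOR_TURN:
                if (magnitude >= turnThresholdDeg) {
                    state = State.WAITING_FOR_RETURN;
                }
                break;
            case WAITING_FOR_RETURN:
                if (magnitude <= frontThresholdDeg) {
                    state = State.DONE;
                }
                break;
            default:
                // DONE is terminal: later frames do not change the result.
                break;
        }
        return state;
    }
}
```

Keeping a gap between the two thresholds means a face hovering around a single cut-off angle cannot complete both movements through jitter alone.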

We built our own FaceDetector wrapper around this library, which takes care of the detector setup and leverages RxAndroid to expose a method returning an Observable


Machine Learning for Face Detection in Android

In the past, using machine learning capabilities was only possible in the cloud, as it required a lot of compute power and high-end hardware. But mobile devices have become much more powerful and our algorithms more efficient. All this has made on-device machine learning a practical possibility and not just science fiction.

On-device machine learning is now used in many places, such as:

- Smart Assistants: Such as Cortana, Siri, and Google Assistant. Google Assistant received a major update at this year’s Google I/O, and a major area of focus was increasing its on-device machine learning capabilities.

- Snapchat Filters: Snapchat uses machine learning to detect human faces and apply 3D filters such as glasses, hats, and dog filters.

- Smart Reply: Smart Reply is an on-device capability included in chat applications such as WhatsApp, with the aim of providing a pre-built message template to send in response to a message.

- Google Lens: Although in its nascent stage, Google Lens uses machine learning models to identify and label objects.

And the list goes on. So, in this post we’ll take a look at how to use machine learning capabilities in our Android apps and make them smarter.

What is Firebase ML Kit?

Firebase ML Kit is a mobile SDK that makes it easier for mobile developers to include machine learning capabilities in their applications. It consists of the following pre-built APIs:

- Text Recognition: To recognize and extract text from images.

- Face Detection: To detect faces and facial landmarks along with contours.

- Object Detection and Tracking: To detect, track and classify objects in camera and static images.

- Image Labelling: Identify objects, locations, activities, animal species, and much more.

- Barcode scanning: Scan and process barcodes.

- Landmark recognition: Identifying popular landmarks in an image.

- Language ID: To detect the language of the text.

- On-Device Translation: Translating text from one language to another.

- Smart Reply: Generating textual replies based on previous messages.

Apart from these, you can use your own custom image classification models (.tflite models) via AutoML.

ML Kit is basically a wrapper over the complexities of including and using machine learning capabilities in your mobile app.

What will we be creating?

We’ll be using the Face Detection capability of ML Kit to detect faces in an image. We’ll capture the image with the camera and run inference on it.

To use ML Kit, it is necessary to have a Firebase account. If you don’t already have one, go ahead and create an account: https://firebase.google.com

Creating a project on Firebase

Once you’ve created an account on Firebase, go to https://console.firebase.google.com and click on “Add project”.

Give your project a name and click on “Create”. It can take a few seconds before your project is ready. Next, we’ll go ahead and add an Android app to our project.

Adding an app

Open Android Studio and start a new project with an empty activity.

Then click on Tools -> Firebase. This will open the Firebase Assistant in the right-hand panel. From the list of options, select ML Kit and click on “Use ML Kit to detect faces in images and video”.

It will prompt you to connect Android Studio to an app in Firebase. Click on connect and sign in to your Firebase project.

Once you permit Android Studio to access your Firebase project, you’ll be prompted to select a project. Choose the one you created in the previous step.

After that you’ll need to add ML Kit to your app, which is a one-click setup from the assistant. Once it’s done adding dependencies, you’re ready to go ahead and create your application.

Configuring AndroidManifest.xml

You’ll need to modify your AndroidManifest.xml to enable offline machine learning in your Android app.

Add the following meta-data tag inside your application tag.

We’ll also be adding a camera feature to our application, so add a camera permission tag to the manifest.

Adding a camera to our application

To add the camera to our application we’ll be using WonderKiln’s CameraKit. To use CameraKit, add the following dependencies in your app-level build.gradle file:

Note: The Kotlin dependencies are only needed if you use Kotlin in your Android app.

Sync the project and your dependencies will be downloaded.

Next, we’ll be adding a CameraKitView in our activity_main.xml layout file. Add the following code in your layout file:

Also add a button to the bottom of the screen to capture images.

Configuring the camera

We’ll be initializing the camera in our MainActivity.kt file. For this, we’ll override some lifecycle methods and within these methods, we’ll call the lifecycle methods for our camera:

Starting from Android M, we need to ask for runtime permissions. This is handled by WonderKiln’s CameraKit library itself. Just override the following method:

Capturing an Image

We’ll capture an image on button press and send the image to our detector. Then we’ll be applying the machine learning model to the image to detect faces.

Set an OnClickListener on the button and, inside the onClick function, call the captureImage function of the camera view.

We’ll decode the byteArray into a bitmap and then scale the bitmap to a reasonable size, essentially the size of our camera_view.

Now it’s time to finally run our detector over the bitmap. For this, we create a separate function runDetector().

Starting Detection

This is the meat of the application. We’ll be running a detector over our input image. First, create a FirebaseVisionImage from the captured bitmap.

Then it’s time to add some options such as performanceMode, contourMode, and landmarkMode.

Now, we’ll create our detector using these options.

Finally, it’s time to start detection. Using the detector, call the detectInImage function and pass in the image. We will get callbacks for success and failure.

This is what the runDetector function looks like:

Creating the bounding box

In order to draw a bounding box on the detected faces, we’ll need to create two views. The first is a transparent overlay view on which we will draw the box. The second is the actual box.

Here’s the GraphicOverlay.java file:

Here is the actual rectangle box:

It’s time to add this graphicOverlay view in our activity_main.xml layout file:

This is what our final activity_main.xml file looks like:

Now it’s time to add the bounding box around the detected faces. Using the detector, we receive the faces in our processFaceResult() function.

Iterate over all the faces and add a box over each face as such:
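One detail worth spelling out when drawing the boxes is the coordinate mapping: the detector reports each box in image pixels, while the overlay draws in view pixels. The plain-Java sketch below shows only that scaling step, under stated assumptions: `OverlayMapper` is a hypothetical helper (not part of ML Kit or CameraKit), boxes are given as `{left, top, right, bottom}` arrays, and the image is assumed to fill the whole view without mirroring, which a front-camera preview may not satisfy.

```java
import java.util.ArrayList;
import java.util.List;

final class OverlayMapper {
    private OverlayMapper() {}

    /** Maps one {left, top, right, bottom} box from image pixels to view pixels. */
    static float[] toOverlay(int[] box, int imageWidth, int imageHeight,
                             int viewWidth, int viewHeight) {
        float scaleX = (float) viewWidth / imageWidth;
        float scaleY = (float) viewHeight / imageHeight;
        return new float[] {
            box[0] * scaleX, box[1] * scaleY, box[2] * scaleX, box[3] * scaleY
        };
    }

    /** Iterates over every detected face box and maps each one. */
    static List<float[]> mapAll(List<int[]> boxes, int imageWidth, int imageHeight,
                                int viewWidth, int viewHeight) {
        List<float[]> mapped = new ArrayList<>();
        for (int[] box : boxes) {
            mapped.add(toOverlay(box, imageWidth, imageHeight, viewWidth, viewHeight));
        }
        return mapped;
    }
}
```

If the bitmap has already been scaled to the size of the camera view, both scale factors are 1 and the boxes can be drawn as reported.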

Conclusion

This is what our application looks like at the end.

You can download the source code for this application on GitHub: https://github.com/Ayusch/mlkit-facedetection.git

Play around with it and let me know what innovative ideas you come up with using machine learning in your Android applications.


Recognize text and facial features with ML Kit: Android

1. Introduction

ML Kit is a mobile SDK that brings Google’s machine learning expertise to Android and iOS apps in a powerful yet easy-to-use package. Whether you’re new or experienced in machine learning, you can easily implement the functionality you need in just a few lines of code. There’s no need to have deep knowledge of neural networks or model optimization to get started.


How does it work?

ML Kit makes it easy to apply ML techniques in your apps by bringing Google’s ML technologies, such as Mobile Vision and TensorFlow Lite, together in a single SDK. Whether you need the real-time capabilities of Mobile Vision’s on-device models, or the flexibility of custom TensorFlow Lite models, ML Kit has you covered.

This codelab will walk you through creating your own Android app that can automatically detect text and facial features in an image.

Are you an iOS developer? Check out the iOS specific version of this codelab.

What you will build

In this codelab, you’re going to build an Android app with ML Kit. Your app will:

- Use the ML Kit Text Recognition API to detect text in images

- Use the ML Kit Face Contour API to identify facial features in images

What you’ll learn

- How to use the ML Kit SDK to easily add advanced Machine Learning capabilities such as text recognition and facial feature detection

What you’ll need

- A recent version of Android Studio (v3.0+)

- Android Studio Emulator or a physical Android device

- The sample code

- Basic knowledge of Android development in Java

- Basic understanding of machine learning models

This codelab is focused on ML Kit. Non-relevant concepts and code blocks are glossed over and are provided for you to simply copy and paste.

2. Getting set up

Download the Code

Click the following link to download all the code for this codelab:

Download source code

Unpack the downloaded zip file. This will unpack a root folder (mlkit-android-master) with all of the resources you will need. For this codelab, you will only need the resources in the vision subdirectory.

The vision subdirectory in the mlkit-android-master repository contains two directories:

starter—Starting code that you build upon in this codelab.

final—Completed code for the finished sample app.

3. Check the dependencies for ML Kit

Verify the dependencies for ML Kit

The following lines should already be added to the end of the build.gradle file in the app directory of your project (check to confirm):

build.gradle

These are the specific ML Kit dependencies that you need to implement the features in this codelab.

4. Run the starter app

Now that you have imported the project into Android Studio and checked the dependencies for ML Kit, you are ready to run the app for the first time. Start the Android Studio emulator, and click Run.

The app should launch on your emulator. At this point, you should see a basic layout that has a drop down field which allows you to select between 3 images. In the next section, you will add text recognition to your app to identify text in images.

5. Add on-device text recognition

In this step, we will add the functionality to your app to recognize text in images.

Set up and run on-device text recognition on an image

Add the following to the runTextRecognition method of the MainActivity class:

MainActivity.java

The code above configures the text recognition detector and calls the function processTextRecognitionResult with the response.

Process the text recognition response

Add the following code to processTextRecognitionResult in the MainActivity class to parse the results and display them in your app.

MainActivity.java

Run the app on the emulator

Now click Run.

Your app should now look like the image below, showing the text recognition results and bounding boxes overlaid on top of the original image.

Photo: Kai Schreiber / Wikimedia Commons / CC BY-SA 2.0

Congratulations, you have just added on-device text recognition to your app using ML Kit! On-device text recognition is great for many use cases as it works even when your app doesn’t have internet connectivity and is fast enough to use on still images as well as live video frames.

6. Add on-device face contour detection

In this step, we will add functionality to your app to recognize the contours of faces in images.

Set up and run on-device face contour detection on an image

Add the following to the runFaceContourDetection method of the MainActivity class:

MainActivity.java

The code above configures the face contour detector and calls the function processFaceContourDetectionResult with the response.

Process the face contour detection response

Add the following code to processFaceContourDetectionResult in the MainActivity class to parse the results and display them in your app.

MainActivity.java

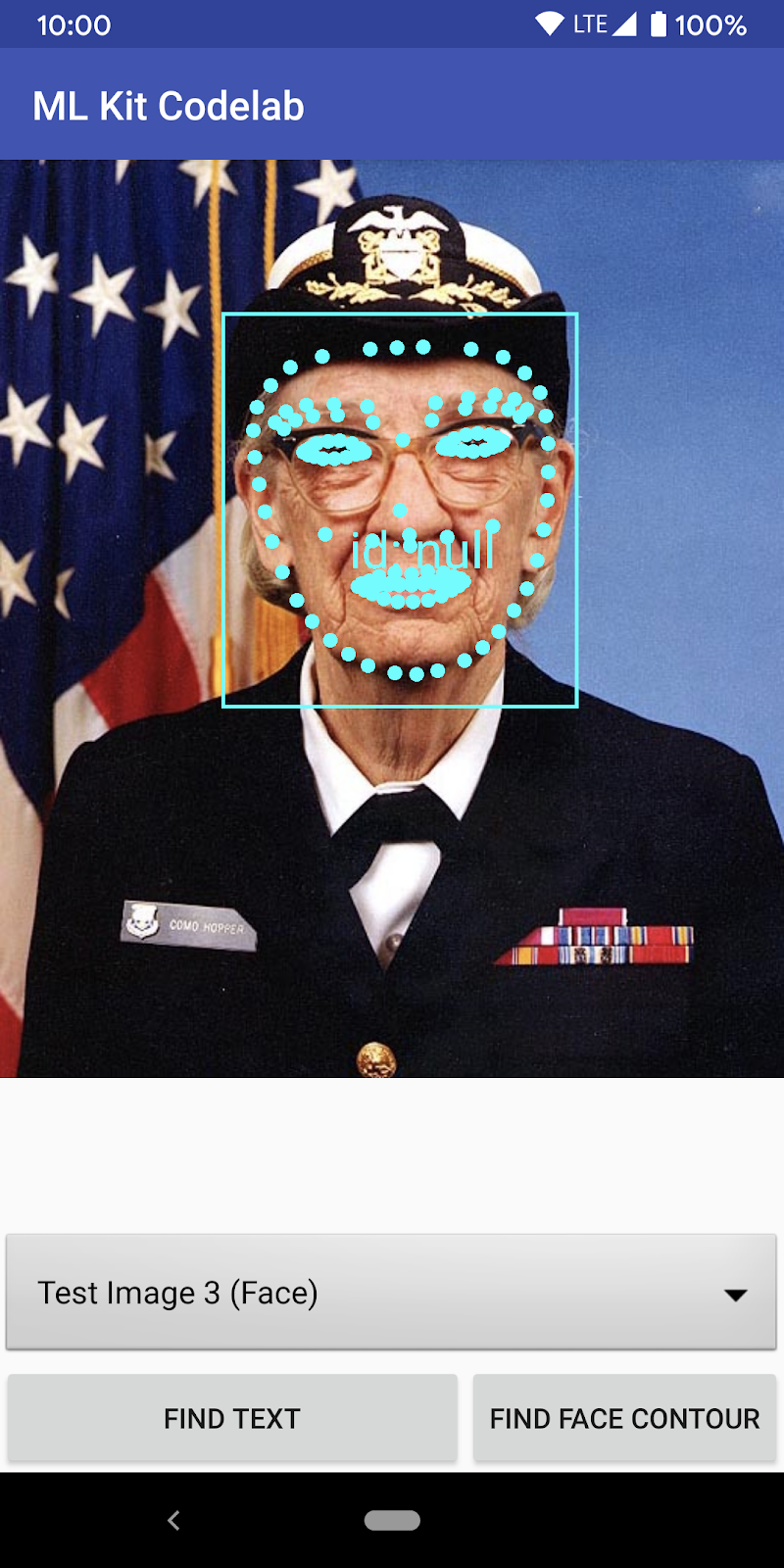

Run the app on the emulator

Now click Run.

Your app should now look like the image below, showing the face contour detection results and showing the contours of the face as points overlaid on top of the original image.

Congratulations, you have just added on-device face contour detection to your app using ML Kit! On-device face contour detection is great for many use cases as it works even when your app doesn’t have internet connectivity and is fast enough to use on still images as well as live video frames.

7. Congratulations!

You have successfully used ML Kit to easily add advanced machine learning capabilities to your app.

What we’ve covered

- How to add ML Kit to your Android app

- How to use on-device text recognition in ML Kit to find text in images

- How to use on-device face contour detection in ML Kit to identify facial features in images

Next Steps

- Use ML Kit in your own Android app!

Learn More

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.
