- Unreal releases an iPhone app that captures facial expressions for Unreal Engine

- A full real-time body motion capture system built with the iPhone X

- How to do 3D motion capture using the latest iPhone camera

- iPhone facial motion capture

- Up Your Game with Better Facial Mocap New in v1.1

- 1 Highest Tracking Precision (Free App)

- Face Cap — Motion Capture 4+

- FBX Facial motion capture

- niels jansson

- Screenshots

- Description

- What’s New

- Ratings and Reviews

- Pretty good. Has trouble with “w”

- Great opportunity, Awful execution

- Developer Response ,

- Great help in our production pipeline

- App Privacy

- Data Not Collected

Unreal releases an iPhone app that captures facial expressions for Unreal Engine

Unreal has released an iOS app that reads the user’s facial expressions and animates them on an Unreal Engine character in real time. The app is called Live Link Face. According to the developers, it is intended both for professional motion capture shoots and for amateur use such as streaming, the Unreal website reports.

Live Link Face is already available for download in the App Store. The app uses the ARKit augmented reality framework and the TrueDepth camera system found in the iPhone X and later, which includes a front-facing camera, an infrared camera, a dot projector and an infrared emitter. The app sends the data they capture to a PC, which processes it and uses it to build the facial animation.

The app streams data from the iPhone to the PC over a multicast network, which allows it to synchronize with several devices at once. To do this, it uses Tentacle Sync technology, which synchronizes the devices with a single device that keeps the timecode for the shoot. Live Link Face also supports the OSC (Open Sound Control) protocol, which lets users start recording on several iPhones with a single tap.
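As a rough illustration of the OSC trigger described above, the sketch below hand-encodes an OSC 1.0 message and sends the same UDP packet to several phones. The `/RecordStart` address with a slate name and take number, the IP addresses and the port are assumptions made for illustration; check the app’s OSC settings and documentation for the actual commands and listening port.

```python
import socket
import struct


def osc_pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    padded_len = (len(raw) // 4 + 1) * 4
    return raw.ljust(padded_len, b"\x00")


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message; this sketch supports string and int32 arguments only."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            type_tags += "s"
            payload += osc_pad(arg.encode("ascii"))
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def start_recording(phones, slate: str, take: int) -> None:
    """Send one record-start packet to every (host, port) pair in `phones`."""
    # "/RecordStart" is an assumed command name, not confirmed by this article.
    packet = osc_message("/RecordStart", slate, take)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, port in phones:
            sock.sendto(packet, (host, port))
```

A call such as `start_recording([("192.168.1.21", 8000), ("192.168.1.22", 8000)], "Scene1", 3)` would then trigger all listed phones from one place; the addresses and port here are placeholders.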

The developers say the app’s feature set is broad enough to cover a range of scenarios. For example, it can help streamers who prefer to show a digital avatar on stream, because Live Link Face can adjust naturally and account for head rotation without the performer having to wear special motion capture equipment.

Unreal does not say whether a similar app is being developed for Android. According to Engadget, this may be due to the wide variety of front-camera setups found in smartphones from different manufacturers. It is also unclear whether the app will work on iPhones without TrueDepth.

The studio said it will continue making Unreal Engine more accessible, calling this one of its priorities. According to the developers, the release of Live Link Face is one step in that direction, making motion capture simpler for content creators.

Source

A full real-time body motion capture system built with the iPhone X

A video has appeared online in which a developer from Kite & Lightning demonstrates a facial animation capture system of his own design, built around the iPhone X camera.

This is already his third test of the motion capture system. Reportedly, this time he mounted the phone on a DIY mocap helmet and also wore a special suit so that he could capture the whole body in addition to the face and drive a computer-generated character from the game Bebylon: Battle Royale. The tests began with the developer simply recording and retargeting his own face using the iPhone X’s front camera. Now he has completed the work and can reproduce the game character’s movements in full, with the additional help of the iPhone X.

As the developer wrote in a comment under the video, he works on this in his spare time, looking for a fast way to do full performance capture (face and body) for Bebylon: Battle Royale, the game Kite & Lightning is developing. YouTube users, for their part, consistently rate his videos highly, saying that he is doing a huge amount of work and that his invention looks very impressive.

Source

How to do 3D motion capture using the latest iPhone camera

New third-party apps and creative methods allow you to create something far removed from the world of Animoji & Memoji.

It’s been a few years since the release of the iPhone X introduced users to a TrueDepth camera and facial recognition software; with the release of the XR and iPhone 11 Pro, third-party app makers and creators have had more incentives to explore the possibilities of TrueDepth. This will be of interest to any artists wondering if they can unlock the capabilities of their iPhone’s AR-toolkit for motion capture work, and produce something more substantive than a nodding chicken head to send to their friends.

Anyone wondering if body capture work is possible with the iPhone can try a technique pioneered by Cory Strassburger, co-founder of LA-based cinematic VR studio Kite & Lightning. Using an iPhone X in tandem with Xsens inertial motion capture technology, Cory shows you can produce simultaneous full-body and facial performance capture, with the final animated character live streamed, transferred and cleaned via IKINEMA LiveAction to Epic Games’ Unreal Engine. This is all done in total real time, as the below video demonstrates.

The method relies on a DIY mocap helmet with an iPhone X directed at the user’s face, an Xsens MVN system and IKINEMA LiveAction to stream and retarget the motion to your character of choice in Unreal Engine. With this setup, users can act out a scene wherever they are, as Cory demonstrated at 2018’s Siggraph convention.

Those interested in facial capture work, meanwhile, can have a go with Live Face, an app which featured on our sister site Macworld. Developed by Reallusion, this free app tracks data points on the face and feeds them to a Mac or PC in real time, using your iPhone as a hotspot and connecting to your computer via Wi-Fi.

It’d be wise to note though that the end data is received and processed by Reallusion’s CrazyTalk Animator 3 suite; the app is currently only compatible with this software, meaning anyone without it will have to shell out £84.99/$89.99 on the App store if interested.

Another option for facial capture is CV-AR from Maxon, a free app also released in June that’s compatible with the grandstand that is Cinema 4D. The software captures your facial animation and sends it to C4D, textures and sound included, with the data stored locally on your iPhone inside the app itself.

The app is designed to make the capture and transfer of facial animation as seamless and effortless as possible; transfers are made possible by scanning a QR code, so there are no hotspot or USB options with this one.

A free sister app joined it in March 2020 in the form of Moves by Maxon, which enables iPhone X/XR and 11 Pro users to capture facial motion and whole-body movement, letting artists bring motion sequences into Cinema 4D with limited technical effort.

A separate plugin is required to transfer the captured data from your Apple device to Cinema 4D and can be downloaded for free from the Cineversity website. This plugin is only compatible with the latest version of Cinema 4D — Release 2, or later.

A more low-key release is Face Cap from solo developer Niels Jansson. This one is very interesting as its output can work with not only C4D, but also Lightwave 3D, Autodesk Maya, Houdini and Blender, putting its competitors to shame (insert blush-face emoji).

With Face Cap you can record 50 different facial expressions for a recording duration of up to 10 minutes. It exports a generic mesh, blendshapes and animation as ASCII FBX, and offers a native iOS sharing interface so you can email or Dropbox your recordings.

Lastly, let’s have a little look at something different — the Animation for iPhone X service from France’s Polywink.

Polywink is an online platform for 3D facial animation that aims to save studios and 3D professionals time and budget by automatically generating blendshapes and rigs. In other words, it’s an outsourcing service, with its iPhone option allowing you to upload a neutral 3D head model and receive a character head ready to be animated.

The service automatically generates a set of 51 blendshapes adapted to the specific topology and morphology of your character. It closely follows the ARKit documentation, meaning you can plug your model into the ARKit Unity Plugin and let the iPhone’s face tracking do the rest; no additional rigging or modelling is required. The service will set you back $299.00 per head, and promises a 24-hour turnaround.
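To make the role of those blendshapes concrete, here is a minimal sketch of the standard linear blendshape model: each shape stores per-vertex offsets from the neutral head, and the tracker supplies one weight per shape on every frame. The function name and the toy data are hypothetical, and this is not Polywink’s or Unity’s implementation; `jawOpen` is used only as an example of an ARKit-style shape name.

```python
def apply_blendshapes(neutral, deltas, weights):
    """Return neutral + sum(weight * delta) per vertex.

    neutral: list of (x, y, z) vertex positions for the neutral head
    deltas:  dict mapping shape name -> list of (dx, dy, dz) offsets
    weights: dict mapping shape name -> tracked weight for this frame
    """
    result = [list(vertex) for vertex in neutral]
    for name, weight in weights.items():
        delta = deltas.get(name)
        if delta is None:
            continue  # the tracker reported a shape this rig does not have
        w = min(max(weight, 0.0), 1.0)  # clamp to the usual 0..1 range
        for vertex, offset in zip(result, delta):
            for axis in range(3):
                vertex[axis] += w * offset[axis]
    return result


# Toy two-vertex "mesh" with a single shape.
neutral = [(0, 0, 0), (1, 0, 0)]
deltas = {"jawOpen": [(0, -1, 0), (0, -2, 0)]}
frame = {"jawOpen": 0.5}
posed = apply_blendshapes(neutral, deltas, frame)
```

Because the model is a plain weighted sum, a rig whose shape names match the tracker’s output needs no extra rigging: the weights can be applied directly.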

Source

iPhone facial motion capture

LIVE FACE Profile

iPhone Gear Profile For Motion LIVE Plug-in

*Compatible with Motion LIVE 2D.

iPhone Face Motion Capture App

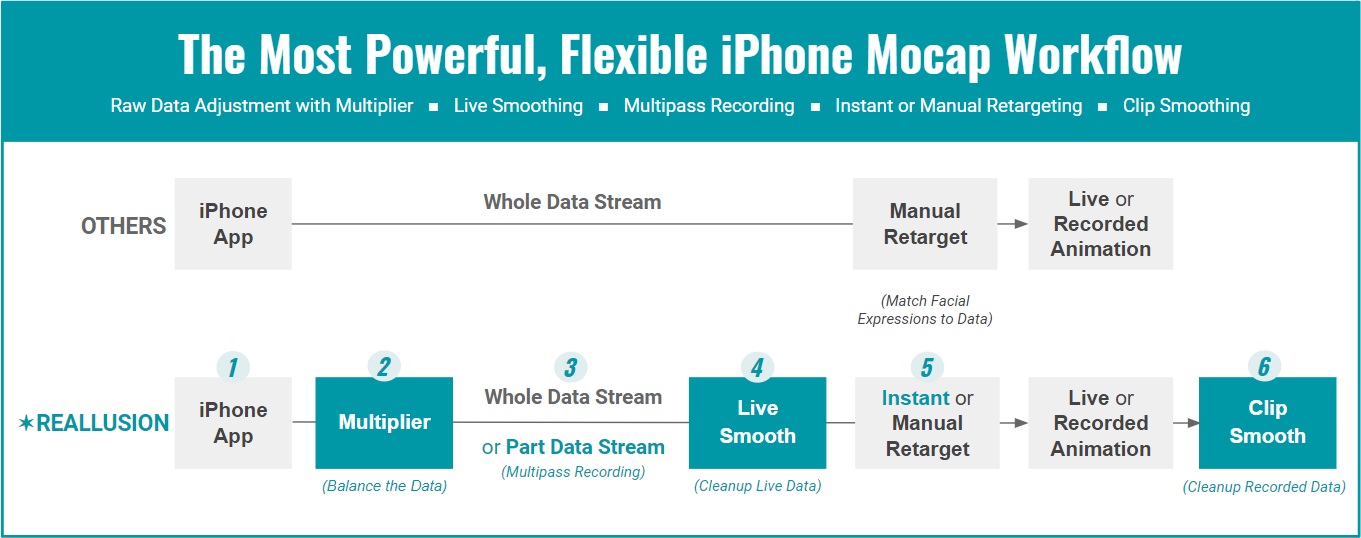

Up Your Game with Better Facial Mocap New in v1.1

Designed by animators, for animators: Reallusion’s latest facial mocap tools go further, addressing core issues in the facial mocap pipeline to produce better animation. From all-new ARKit expressions, to easy adjustment of raw mocap data and retargeting, to multipass facial recording and essential mocap cleanup, we’re covering all the bases to provide you with the most powerful, flexible and user-friendly facial mocap approach yet.

The biggest issue with all live mocap is that it’s noisy, twitchy and difficult to work with, so smoothing the live data in real time is a necessity. And if the mocap data isn’t right, the model’s expressions aren’t what you want, or the current recording isn’t quite accurate, you need access to the back-end tools to adjust all of this. Finally, while you can smooth the data in real time from the start, you can also clean up existing recorded clips using proven smoothing methods, applied at the push of a button.
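The page does not say which smoothing methods the plug-in uses. As one common possibility, the sketch below applies exponential smoothing to per-frame blendshape weights; the class name and the `alpha` parameter are illustrative assumptions, not part of Reallusion’s tools.

```python
class BlendshapeSmoother:
    """Exponential moving average over blendshape weights, frame by frame."""

    def __init__(self, alpha: float = 0.5):
        # alpha near 1 follows the raw data closely; near 0 smooths heavily
        # at the cost of extra lag.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = {}

    def update(self, frame: dict) -> dict:
        """Blend a new frame of weights into the running state and return it."""
        for name, value in frame.items():
            previous = self.state.get(name, value)  # first sample passes through
            self.state[name] = previous + self.alpha * (value - previous)
        return dict(self.state)


def smooth_clip(frames, alpha: float = 0.5):
    """Clean up an already recorded clip with the same filter."""
    smoother = BlendshapeSmoother(alpha)
    return [smoother.update(frame) for frame in frames]
```

The same filter serves both cases mentioned above: `update` can be called on each live frame as it arrives, while `smooth_clip` runs it over a stored recording.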

1 Highest Tracking Precision (Free App)

- Unmatched tracking accuracy and stability.

- Alleviate unwanted lip or neck jitter.

*update required: LIVE FACE App version 1.0.8 (Feb 22, 2021)

Source

Face Cap — Motion Capture 4+

FBX Facial motion capture

niels jansson

- 4.4 • 89 Ratings

- Free

- Offers In-App Purchases

Screenshots

Description

Facial motion capture is now a lot easier.

Record your performance and export the result to FBX or TXT.

The FBX export contains mesh, blendshapes and animation data.

The TXT export contains just animation data.

Additional features:

* Import your own custom avatars (From Blender or Maya).

* Calibrate the capture data.

* Video recording for reference.

* Filter and smooth the recorded data.

* Send blendshape parameter data over Wi-Fi with OSC.

* Lock the head in place.

* Hide the head during recording.

Face Cap does not collect any personal information.

http://www.bannaflak.com/face-cap/privacy/index.html

What’s New

Video capture bug resolved.

Ratings and Reviews

Pretty good. Has trouble with “w”

I only have the free version right now. I can’t quite justify $35 for the full version. I have an iPhone Xs. It seems to struggle a bit with closed mouth shapes. Maybe the Xs cam is a bit different. But overall really cool. I’ll probably get it if there’s another update for Xs.

Great opportunity, Awful execution

The app is a great opportunity for developers to easily integrate higher quality facial animations into their 3D software or game engine.

Well, it would be if the price wasn’t outrageous. $75? I think the developer doesn’t understand his/her audience, and just wants money. But if he/she was smarter, the realization would come that this is a low quality app, with impressive technology, that any experienced developer can make themselves quickly.

The UI is ugly and not clear (even though there are only a few buttons) and the facial motion detection is not overly accurate. If this developer was serious about making money, then they should charge $2-7 for the app MAX. Even the Zbrush-clone apps on the App Store cost less than this, and those are far more complex.

I’ll just connect my phone to Unity and program the rest. This app was close to being amazing but SURPRISE SURPRISE, indies don’t want to pay $75 for low quality and options, and companies are just going to use something professional.

Developer Response

The app can be evaluated for free, including the export functionality. Pricing is based on investments made for development, maintenance and support.

Great help in our production pipeline

We have been using this for basic lip sync capture for one of our series and have gotten good results. We created a script in Maya to help polish the original capture to where we have a very usable output with only a little cleanup. What is cool is that the voice artist can use the app to capture the performance during recording because it runs on an iPhone. This is a great advantage.

App Privacy

The developer, niels jansson, indicated that the app’s privacy practices may include handling of data as described below. For more information, see the developer’s privacy policy.

Data Not Collected

The developer does not collect any data from this app.

Privacy practices may vary, for example, based on the features you use or your age.

Source