VSyncs or Vertical Sync — Never heard of it or did you?

VSync is a fascinating subject in itself. Many hardcore gamers will have heard of it. I am not one of those gamers; I first saw VSync while reviewing App Vitals in the Google Play Console. Then I started to read about it. I have tried to explain the whole idea behind it (which is independent of Android) before jumping into the Android context.

Sep 10, 2020 · 9 min read

This post discusses the fundamentals needed to understand VSync and why it is relevant to Android developers, particularly for optimization. Someone smart once said: “Knowing beyond abstractions never does any harm.” That person is me. Ahh! I know it’s a bad joke. Let’s start.

Before we get started on this, we need to understand 2 things correctly.

1. Frame Rate

First, what is a frame, and what determines the frame rate? A frame is a single still image, which is combined in a rapid slideshow with other still images, each one slightly different, to achieve the illusion of natural motion. The frame rate is how many of these images are displayed in one second.

Once these frames are drawn, they are passed to the display hardware through ports (this can also be a limiting factor that reduces the butteriness of the UI, but we will not discuss it now). This brings us to another concept:

2. Refresh Rate

Once the images are sent to the display hardware, the question is how fast it can refresh the screen with the new frames it receives. The refresh rate is the rate at which the display hardware redraws the screen.

Because these two rates belong to two different pieces of hardware, they will often differ. To understand this clearly, let us examine each case.

Frame rate > Refresh Rate

Logically, this means that the GPU is producing frames faster than the display hardware can consume them. When the monitor displays anything on the screen, it reads the frame from an area of memory called the framebuffer.

When the display hardware has not finished drawing one frame and the GPU overwrites the framebuffer with the next one, we see part of the first frame and part of the second frame on the screen at once. This is called screen tearing.

We have all faced this problem while playing games like Counter-Strike: we move the gun and the image tears. Now you know why it happens, and that the game’s high frame rate is the culprit. The diagram below shows what happens behind the scenes.

We can see that a new frame is pushed while another frame is still being drawn on the screen.
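The tearing described above can be reproduced with a toy model. This is only a sketch under simplifying assumptions: the "display" reads a list row by row, the "GPU" replaces the whole list at a chosen row, and the names `scan_out`, `overwrite_at`, and `new_frame` are made up for illustration.

```python
def scan_out(framebuffer, rows, overwrite_at=None, new_frame=None):
    """Read the framebuffer row by row, as a display does during one refresh.

    If overwrite_at is given, the GPU replaces the whole framebuffer with
    new_frame just before that row is read (the unsynchronized case).
    """
    shown = []
    for row in range(rows):
        if overwrite_at is not None and row == overwrite_at:
            framebuffer[:] = new_frame  # GPU writes in the middle of a refresh
        shown.append(framebuffer[row])
    return shown


ROWS = 6
frame_a = ["A"] * ROWS
frame_b = ["B"] * ROWS

# No overwrite during the refresh: the whole screen shows frame A.
clean = scan_out(list(frame_a), ROWS)
# Overwrite at row 3: top half is frame A, bottom half is frame B.
torn = scan_out(list(frame_a), ROWS, overwrite_at=3, new_frame=frame_b)

print("".join(clean))  # AAAAAA
print("".join(torn))   # AAABBB
```

The second line of output is the tear: one refresh that contains rows from two different frames.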

Frame rate < Refresh Rate

Here the GPU produces frames more slowly than the display refreshes. Modern display systems are often adaptive, though: when they see that frames are arriving less frequently, they reduce the refresh rate accordingly.

Now that we have understood the fundamentals, let us try to understand how VSync works and how it tries to solve the problem.

Whenever we encounter a rate-limiting problem, the obvious solutions that come to mind are backpressure, buffering, and dropping. We will examine each possibility now.

A. Can we drop frames? NO. Dropping is not an option as we are going to miss what the game engine is rendering.

B. Can we use backpressure to limit the frames generated by the GPU? NO, we cannot. The frame rate will not be governed by refresh rates. Solution discarded.

C. The last solution is buffering. So yes, VSync solves this problem using a mechanism called double buffering (we will talk about it later in this post, hold on!).

Vertical Synchronization or VSync

It is a mechanism that synchronizes the frame rate with the refresh rate of the display hardware. The diagram below shows what we want to achieve here.

It makes a rule that the GPU will not copy any new frame to the framebuffer before the current refresh cycle is completed.

What is represented as a “buffer” in the above diagram is actually a double buffer (it can also be three buffers, which is called triple buffering and is used to eliminate the pain points of double buffering). So, what is the purpose of two buffers?

One buffer is used by the GPU to write new frames. It is called the back buffer, and the framebuffer described earlier is now called the front buffer. So the rule is:

“Frames will be moved from the back buffer to the front buffer only when the current refresh cycle of the display hardware is completed.” This makes sure that you see a smooth UI.
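The rule can be written down as a small model. This is a minimal sketch, not how any real graphics driver is implemented; the class `DoubleBuffer` and its methods `render` and `on_vsync` are hypothetical names chosen for this example.

```python
class DoubleBuffer:
    """Toy double buffer: the back buffer only becomes visible on VSync."""

    def __init__(self):
        self.front = None        # what the display reads during a refresh
        self.back = None         # what the GPU writes into
        self.back_ready = False  # True while the back buffer holds an unshown frame

    def render(self, frame):
        """GPU tries to submit a frame; returns False if it has to wait."""
        if self.back_ready:
            return False  # back buffer still holds an unshown frame
        self.back = frame
        self.back_ready = True
        return True

    def on_vsync(self):
        """Refresh cycle finished: the only place where buffers are swapped."""
        if self.back_ready:
            self.front, self.back = self.back, self.front
            self.back_ready = False
        return self.front


buffers = DoubleBuffer()
accepted = [buffers.render("frame 1"), buffers.render("frame 2")]
first = buffers.on_vsync()    # frame 1 becomes visible
buffers.render("frame 2")     # now there is room in the back buffer
second = buffers.on_vsync()   # frame 2 becomes visible
third = buffers.on_vsync()    # nothing new was rendered, frame 2 is shown again

print(accepted)               # [True, False]
print(first, second, third)   # frame 1 frame 2 frame 2
```

Because the swap happens only inside `on_vsync`, the display never reads a buffer that is being written, which is the property the rule is meant to guarantee.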

Now, what can go wrong? There is still a catch, and it shows up in games which demand quick responses to onscreen events: the GPU already has two or three frames rendered and stored in the buffer beyond what you’re seeing onscreen at any given moment.

That means that while the GPU is rendering images in direct response to your actions, there is a minuscule delay (measured in milliseconds) between when you perform those actions and when they actually appear onscreen. We have all faced this, right? We do something and the actual response to that action appears after some time. VSync should be taking the blame! Let’s solve this using Ping-Pong Buffering.

Ping-Pong Buffering — It’s good to know about it.

We saw that there is a minuscule amount of delay using VSync while playing immersive games. To reduce that delay, hardware designers came up with a new concept of Ping-Pong Buffering.

Ping-pong buffering doesn’t have the same input lag. Rather than a straight frame buffer which just backs up excess frames and feeds them to the monitor one at a time, this method of vertical synchronization renders multiple frames in video memory at the same time and flips between them every time your monitor requests a new frame. This kind of “page flipping” eliminates the lag from copying a frame from system memory into video memory, which means there’s less input lag. It can be compared to pre-fetching: fetch the data in advance and show it at the right time.

There is one more way to do VSync, triple buffering, which is a little more complex than the other two. We can skip it for now (it is used internally in Android).

Now that we understand the whole story behind VSync, we will analyze how it comes into the picture in Android and app optimization.

VSync — The Android Side of Story

Vertical synchronization was introduced in Android 4.1 Jelly Bean as part of Project Butter to improve UI performance and responsiveness. As per the official “about” page of Jelly Bean:

“Everything runs in lockstep against a 16 millisecond vsync heartbeat — application rendering, touch events, screen composition, and display refresh — so frames don’t get ahead or behind.”

Let’s see what problem haunted Android before 4.1, which Project Butter attempted to solve.

Before VSync was introduced, there was no synchronization between input, animation, and drawing. Input was handled as and when it arrived, and an animation or a change in a view was handled then and there. This resulted in too many CPU operations, and it was difficult to handle input events while an animation was running.

As there was no sync between these three operations, redraws happened on input handling, on animation, and on changes in the view. This exhausted CPU cycles, and input processing was not smooth.

Post-Android 4.1 — Project Butter & Inception of VSync

VSync is an event posted periodically by the Android Linux kernel at a fixed interval, on which input handling, animation, and window drawing happen in a standard order.

VSync signals are delivered at an interval of 16.66 ms, which equates to 60 fps. Input handling, animation, and window drawing happen on the arrival of this signal. If input events arrive before the VSync signal, they are queued. When the signal arrives, the queued input is handled first, then animation, and then the UI redraws are done.
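The interval is simply the inverse of the refresh rate, so the frame budget shrinks on faster displays. A quick sketch of the arithmetic follows; the function name `vsync_interval_ms` is made up for this example, and the 90 Hz and 120 Hz rows are added only for comparison.

```python
def vsync_interval_ms(refresh_rate_hz):
    """Time between two VSync pulses, in milliseconds."""
    return 1000.0 / refresh_rate_hz


for hz in (60, 90, 120):
    print(f"{hz} Hz -> {vsync_interval_ms(hz):.2f} ms per frame")
```

At 60 Hz this comes out at roughly 16.7 ms, which is the budget the rest of this post refers to.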

Now we know where the term “16 ms frame” comes from and why Android developers talk about it when looking at app optimization. This signal is received by the Choreographer class, which some of you might have heard of. We will cover it in detail in another post if required.

Choreographer

For now, it is just an abstraction that receives timing pulses (VSync) from the lower subsystem and delivers commands to the higher subsystems to render the upcoming frames.

Each thread that has a Looper has its own Choreographer instance. Consequently, each HandlerThread instance also has its own Choreographer.
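To make the abstraction a little more concrete, here is a toy model of the idea: work is queued as it arrives and only runs when a timing pulse comes in, always as input, then animation, then drawing. It is a sketch in Python, not the Android class; `MiniChoreographer`, `post`, `on_vsync`, and the three constants are hypothetical names for this example.

```python
from collections import defaultdict

# Callback types in the order they run within one frame.
# These names are illustrative, not the Android constants.
INPUT, ANIMATION, DRAW = 0, 1, 2


class MiniChoreographer:
    def __init__(self):
        self._pending = defaultdict(list)

    def post(self, callback_type, callback):
        """Queue work; nothing runs until the next VSync pulse."""
        self._pending[callback_type].append(callback)

    def on_vsync(self, frame_time_ms):
        """Run everything queued for this frame in a fixed order."""
        pending, self._pending = self._pending, defaultdict(list)
        for callback_type in (INPUT, ANIMATION, DRAW):
            for callback in pending[callback_type]:
                callback(frame_time_ms)


log = []
choreographer = MiniChoreographer()
# Work is posted in arbitrary order between two pulses...
choreographer.post(DRAW, lambda t: log.append("draw"))
choreographer.post(INPUT, lambda t: log.append("input"))
choreographer.post(ANIMATION, lambda t: log.append("animation"))
# ...and executed in the standard order when the pulse arrives.
choreographer.on_vsync(16.66)

print(log)  # ['input', 'animation', 'draw']
```

Queuing first and dispatching once per pulse is what keeps the three kinds of work from triggering separate redraws.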

VSync and app performance

We know that apps are locked to 16.66 ms timing pulses, so what if your app does something that lasts more than 16 ms? You have guessed it right: it will miss VSync signals, which in turn leads to multiple performance-related issues. The Choreographer class has a callback, onVsync(), which handles the VSync signals.

As good Android citizens, our apps should finish their rendering work within 16.66 ms and leave the main thread free to do the three things it is meant for:

- Input Handling

- Drawing/Redrawing UI

- Running smooth animations

The problems can be app-specific, but some of the operations which could make your app miss the timing pulses are:

- Loading heavy classes eagerly at app startup even when they are not required at that time. Use lazy initialization there.

- Encoding or decoding bitmaps on the main thread.

- Loading big shared preferences on the main thread. Android does a good amount of caching here, but devs can still mess up.

- Allocating unnecessary objects in repetitive operations, such as in onDraw() of a View.

- Inflating XML UI with a deep hierarchy, which costs the main thread a lot. Use relative layouts or constraint layout to flatten it.

If you miss a VSync, the Choreographer class keeps track of the last frame that arrived while the main thread was busy with heavy work, using an integer, mFrame. It gets overwritten every time a new frame comes in. Once the main thread is free, it renders the last frame as the first thing in the next VSync. Using the frame number in mFrame, it calculates how many frames were missed while the main thread was busy. You will have seen a message like the following in logcat from time to time: “Skipped N frames! The application may be doing too much work on its main thread.” Yes, it comes from the Choreographer.
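One way to picture the missed-frame calculation is to compare when a pulse was due with when the main thread finally got around to handling it. The sketch below does that with timestamps rather than a frame counter, so treat it as an illustration of the arithmetic and not as the Choreographer source. The function names, the 60 Hz interval, and the warning threshold of 30 frames are assumptions made for this example.

```python
FRAME_INTERVAL_NS = 1_000_000_000 // 60  # about 16.67 ms at 60 Hz
SKIPPED_FRAME_WARNING_LIMIT = 30         # assumed threshold for logging


def skipped_frames(vsync_time_ns, handled_time_ns, interval_ns=FRAME_INTERVAL_NS):
    """How many whole frame intervals passed before the pulse was handled."""
    jitter_ns = handled_time_ns - vsync_time_ns
    if jitter_ns < interval_ns:
        return 0
    return jitter_ns // interval_ns


def warning_for(skipped, limit=SKIPPED_FRAME_WARNING_LIMIT):
    """Return a logcat-style message when too many frames were skipped."""
    if skipped >= limit:
        return (f"Skipped {skipped} frames! The application may be "
                "doing too much work on its main thread.")
    return None


# Main thread blocked for 100 ms, then for 700 ms.
short_stall = skipped_frames(0, 100_000_000)
long_stall = skipped_frames(0, 700_000_000)

print(short_stall, warning_for(short_stall))  # 6 None
print(long_stall, warning_for(long_stall))
```

With these assumed numbers, a 100 ms stall costs 6 frames and stays silent, while a 700 ms stall costs 42 frames and produces the warning.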

There are various benchmarks against which we should be profiling.

- Input latency — 24ms

- Operations on the main thread — 8ms

- Bitmap upload to GPU [Using big resources] — 3.2ms

- Uploading draw commands to GPU — 12ms

This information is available in the flame chart of the Android Profiler. Hovering over the data shows how long it took to execute on a particular thread, and we can navigate to the block of code executing there and examine the problems.

What is VSYNC in Android

This explains VSYNC, but the pace is very fast, and I could not find another good resource to help me understand the topic.

Here is what I understand:

VSYNC occurs every 16 ms, and all the components of a frame (INPUT, ANIMATION, LAYOUT, RECORD, DRAW & RENDER) happen only once within that time, so with VSYNC the rendering of a frame is synchronized, and this limits frame redraws to the specified time.

Please help me confirm whether this understanding is correct.

2 Answers

VSYNC is vertical synchronization. It is a term common to TVs, monitors, displays, and so on. You can basically think of it as the refresh rate: how often the display actually updates. The display can only update on the VSYNC signal, so changes to the display are basically batched until the next VSYNC.

The term comes from old-school TVs, where VSYNC actually changed one line at a time from top to bottom. That is why on some old tube TVs you could see a band of change moving down the screen.

VSYNC is a synchronization signal. It synchronizes the display pipeline. The display pipeline comprises app rendering and the additional attributes needed to display the image.

This VSYNC synchronization signal is triggered based on the FPS (frames per second) configured for the display. Suppose the display is configured for 60 frames per second, i.e. the display is updated with new frames 60 times per second. The VSYNC signal then fires every 16.66 ms (1/60 s).

What is Vsync and why should you use it (or not)

We need PC games to be perfect. After all, we’re dumping loads of cash into the hardware so we can get the most immersive experience possible. But there’s always some type of hiccup, whether it’s a fault in the game itself, issues stemming from hardware, and so on. One glaring problem could be screen “tearing,” a graphic anomaly that seemingly stitches the screen together using ripped strips of a photograph. You’ve seen a game setting called “Vsync” that supposedly fixes this issue. What is Vsync and should you use it? We offer a simplified explanation.

If you’re rather new to PC gaming, we first look at two important terms you should know to understand why you may need Vsync. First, we will cover your monitor’s refresh rate followed by the output of your PC. Both have everything to do with the screen-ripping anomaly. Some of this will be slightly technical so you’ll understand why the anomaly happens in the first place.

Input: Understanding refresh rate

The first half of the equation is your display’s refresh rate. Believe it or not, it’s currently updating what you see multiple times per second, though you likely can’t see it. If your display didn’t update (or refresh), then all you’d see is a static image. What an expensive picture frame!

But that’s no fun, right? You need to see movement even at the most basic level. If you’re not watching video or playing games, the display still needs to update so you can see where the mouse cursor moves, what you’re typing, and so on.

Refresh rates are defined in hertz, a measurement of frequency. If you have a display with a 60Hz refresh rate, then it refreshes each pixel 60 times per second. If your panel does 120Hz, then it can refresh each pixel 120 times per second. Thus, the higher the updates each second, the smoother the experience.

The goal of a high refresh rate is to reduce the common motion blurring problem associated with LCD and OLED panels. Actually, you are part of the problem: Your brain predicts the path of motion faster than the display can render the next image. Increasing the refresh rate helps but typically other technologies are required to minimize the blur.

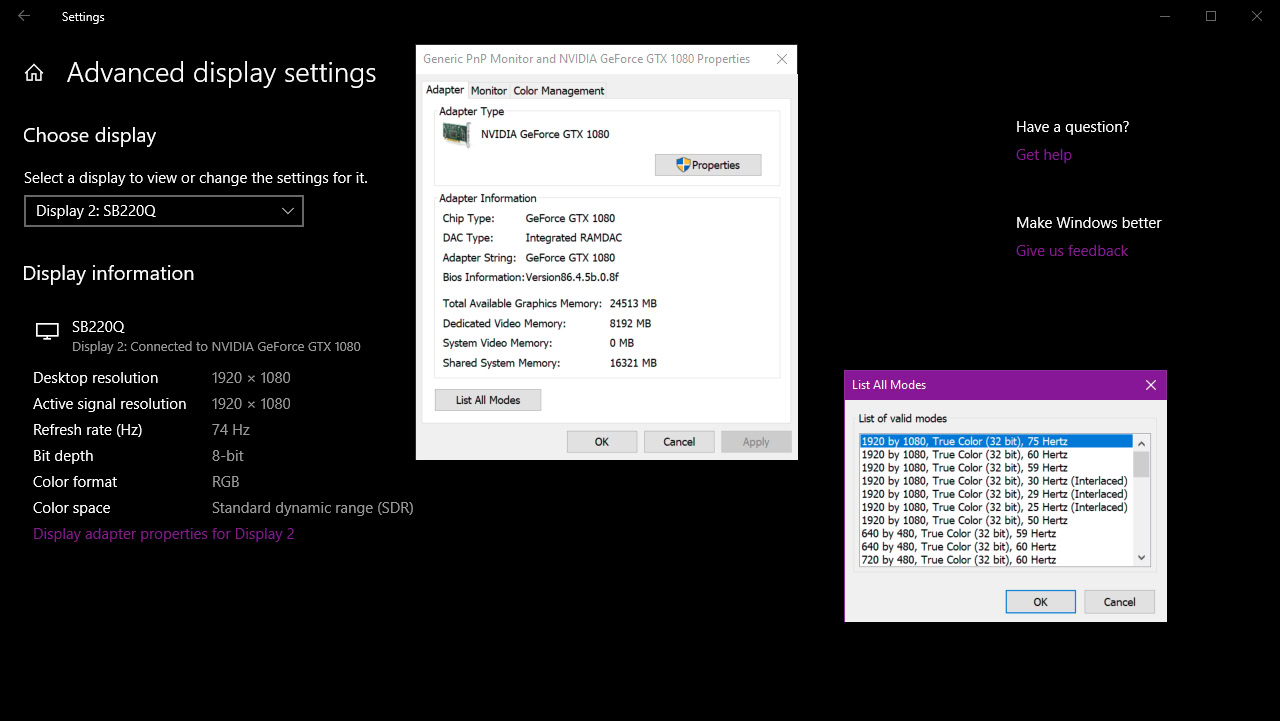

Modern mainstream desktop displays typically have a resolution of 1,920 x 1,080 at 60Hz. The Acer display we used to write this article has an unusual 75Hz refresh rate at that resolution, though it’s adjustable to 60Hz and lower. Our Alienware sports a higher 120Hz refresh rate at 2,560 x 1,440.

You can view your display’s current refresh rate in Windows 10 by following these steps:

- Right-click anywhere on the desktop.

- Select Display settings.

- Scroll down and select Advanced display settings.

- Select Display adapter properties.

- If you have more than one connected display, select a display in the drop-down menu under Choose display. After that, click the Display adapter properties for Display # link.

Knowing your display’s refresh rate capabilities and resolutions is important in gaming. For instance, your display may have a maximum resolution of 3,840 x 2,160, but it can only hit 30Hz at that resolution. If you crank the screen down to 1,920 x 1,080, you could see a higher 60Hz refresh rate if not more.

Now let’s follow your HDMI, DisplayPort, DVI, or VGA cable back to the source: Your gaming PC.

Output Part 1: Understanding frames per second

This is the other half of the equation. Movies, TV shows, and games are nothing more than a sequence of images. There’s no actual movement involved. Instead, these images trick your brain into perceiving movement based on the contents of each image, or frame.

Movies and TV shows in North America typically run at 24 frames per second, or 24Hz (or 24fps). This rate became standard in Hollywood to accommodate sound starting with The Jazz Singer in 1927. It was also a means of keeping the overall budget at a manageable level due to film stock prices.

That said, we’ve grown accustomed to the low framerate even though our eyeballs can see 1,000 frames per second or more. Movies and TV shows are designed to be an escape from reality, and the low 24Hz rate helps preserve that dream-like state.

Higher framerates, as seen with The Hobbit trilogy shot at 48Hz, move jarringly close to real-world motion. In fact, live video jumps up to 30Hz or 60Hz, depending on the broadcast. James Cameron initially targeted 60Hz with Avatar 2 but dropped the rate down to 48Hz.

Gaming is different. You don’t want that dream-like state. You want immersive, fluid, real world-like action because, in your mind, you’re participating in another reality. A game running at 30 frames per second is tolerable, but it’s just not liquid smooth. You’re fully aware that everything you do and see is based on moving images, killing the immersion.

Jump up to 60 frames per second and you’ll feel more connected with the virtual world. Movements are fluid, like watching live video. The illusion gets even better if your gaming machine and display can handle 120Hz and 240Hz. That’s eye candy right there, folks.

Output Part 2: The frame grinder

Your PC’s graphics processing unit, or GPU, handles the rendering load. Since it cannot directly access the system memory, the GPU has its own memory to temporarily store graphics-related assets, like textures, models, and frames.

Meanwhile, your CPU handles most of the math: Game logic, artificial intelligence (NPCs, etc), input commands, calculations, online multiplayer, and so on. System memory temporarily holds everything the CPU needs to run the game (scratch pad) while the hard drive or SSD stores everything digitally (file cabinet).

All four factors – GPU, CPU, memory, and storage – play a part in your game’s overall output. The goal is for the GPU to render as many frames as possible per second. Again, ideally that number is 60. The higher the frame count, the better the visual experience.

That said, output largely depends on the hardware and software environment. For instance, while your CPU handles everything needed to run the game, it’s also dealing with external processes required to run your computer. Temporarily shutting down some of these processes helps, but generally you want a super-fast CPU, so Windows 10 isn’t interfering with gameplay.

Other elements affect output: A slow or fragmented drive, slow system memory, or a GPU that just can’t handle all the action at a specific resolution. If your game runs at 10 frames per second because you insist on playing at 4K, your GPU is most likely the bottleneck. But even if you have more than you need to run a game, the on-screen action handled by both the GPU and CPU may be momentarily overwhelming, dropping the framerate. Heat is another framerate killer.

The bottom line is that framerates fluctuate. This fluctuation stems from the rendering load, the underlying hardware, and the operating system. Even if you toggle an in-game setting that caps the framerate, you may still see fluctuations.

Swapping the buffers

So let’s breathe for a moment and recap. Your display – the input – draws an image multiple times per second. This number typically does not fluctuate. Meanwhile, your PC’s graphics chip – the output – renders an image multiple times per second. This number does fluctuate.

The problem with this scenario is an ugly graphics anomaly called screen tearing. Let’s get a little technical to understand why.

The GPU has a special spot in its dedicated memory (VRAM) for frames called the frame buffer. This buffer splits into Primary (front) and Secondary (back) buffers. The current completed frame resides in the Primary buffer and is delivered to the display during the refresh. The Secondary (back) buffer is where the GPU renders the next frame.

Once the GPU completes a frame, these two buffers swap roles: The Secondary buffer becomes the Primary and the former Primary now becomes the Secondary. The game then has the GPU render a new frame in the new Secondary buffer.

Here’s the problem. Buffer swaps can happen at any time. When the display signals that it’s ready for a refresh and the GPU sends a frame over the wire (HDMI, DisplayPort, VGA, DVI), a buffer swap may be underway. After all, the GPU is rendering faster than the display can refresh. As a result, the display renders part of the first completed frame stored in the old Primary, and part of the second completed frame in the new Primary.

So if your view changed between two frames, the on-screen result will show a fractured scene: The top showing one angle and the bottom showing another angle. You may even see three strips stitched together as seen in Nvidia’s sample screenshot shown above.

This screen tearing is mostly noticeable when the camera moves horizontally. The virtual world seemingly separates horizontally like invisible scissors cutting up a photograph. It’s annoying and pulls you out of the immersion. However, because images are registered vertically, you won’t see tearing up and down the screen.

Enter Vertical Synchronization

You can reduce screen tearing with a software solution called Vertical Synchronization (Vsync, V-Sync), provided in games as a toggle. It prevents the GPU from swapping buffers until the display successfully receives a refresh. The GPU essentially becomes a slave to the display and must idle until it’s given the green light to swap buffers and render a new image.

In other words, the game’s framerate won’t go higher than the display’s refresh rate. For instance, if the display can only do 60Hz at 1,920 x 1,080, Vsync will lock the framerate at 60 frames per second. No more screen tearing.

But there’s a side effect. If your PC’s GPU can’t keep a stable framerate that matches the display’s refresh rate, you’ll experience visual “stuttering.” That means the GPU is taking longer to render a frame than it takes the monitor to refresh. For example, the display may refresh twice using the same frame while it waits for the GPU to send over a new frame. Rinse and repeat.

As a result, Vsync will drop the game’s framerate to 50 percent of the refresh rate. This creates another problem: lag. There’s nothing wrong with your mouse, keyboard, or game controller. It’s not an issue on the input side. Instead, you’re simply experiencing visual latency.

Why? Because the game acknowledges your input, but the GPU is forced to delay frames. That translates to a longer period between your input (movement, fire, etc) and when that input appears on the screen.

The amount of input lag depends on the game engine. Some may produce large amounts while others have minimal lag. It also depends on the display’s maximum refresh rate. For instance, if your screen does 60Hz, then you may see lag up to 16 milliseconds. On a 120Hz display you could see up to 8 milliseconds. That’s not ideal in competitive games like Overwatch, Fortnite, and Quake Champions.
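The halving described above follows from simple arithmetic: with Vsync and two buffers, a frame can only appear on a refresh boundary, so a frame that takes slightly longer than one refresh interval occupies two. A rough sketch of that calculation, assuming a constant render time per frame (real games fluctuate) and using the made-up function name `vsynced_fps`:

```python
import math


def vsynced_fps(render_time_ms, refresh_rate_hz):
    """Effective framerate when every frame must wait for a refresh boundary."""
    interval_ms = 1000.0 / refresh_rate_hz
    # Number of refresh cycles each frame occupies (at least one).
    refreshes_per_frame = max(1, math.ceil(render_time_ms / interval_ms))
    return refresh_rate_hz / refreshes_per_frame


print(vsynced_fps(10.0, 60))  # 60.0: the GPU keeps up with the display
print(vsynced_fps(20.0, 60))  # 30.0: just too slow, so every frame takes two refreshes
print(vsynced_fps(40.0, 60))  # 20.0: three refreshes per frame
```

This is why a GPU that could manage around 50 frames per second without Vsync ends up at 30 on a 60Hz display with it.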

Triple buffering: the best of both worlds?

You may find a triple buffering toggle in your game’s settings. In this scenario, the GPU uses three buffers instead of two: One Primary and two Secondary. Here the software and GPU draw in both Secondary buffers. When the display is ready for a new image, the Secondary buffer containing the latest completed frame changes to the Primary buffer. Rinse and repeat at every display refresh.

Thanks to that second Secondary buffer, you won’t see screen tearing given the Primary and Secondary buffers aren’t swapping while the GPU delivers a new image. There’s also no artificial delay as seen with double-buffering and Vsync turned on. Triple buffering is essentially the best of both worlds: The tear-free visuals of a PC with Vsync turned on, and the high framerate and input performance of a PC with Vsync turned off.
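The flow can be sketched as a toy model: the GPU alternates between two Secondary buffers without ever waiting, and at each refresh the one holding the newest completed frame becomes the Primary. This is a simplified illustration, not how any driver implements it; the class `TripleBuffer` and its members are hypothetical names.

```python
class TripleBuffer:
    """Toy triple buffer: one Primary (front) and two Secondary (back) buffers."""

    def __init__(self):
        self.front = None          # being sent to the display
        self.backs = [None, None]  # the two Secondary buffers
        self.latest = None         # index of the newest completed back buffer
        self._next = 0             # index the GPU draws into next

    def render(self, frame):
        """GPU never waits: it draws into the back buffer that is free."""
        self.backs[self._next] = frame
        self.latest = self._next
        self._next = 1 - self._next

    def on_refresh(self):
        """Display is ready: promote the newest completed frame, if any."""
        if self.latest is not None:
            newest = self.latest
            # The old Primary is recycled as a Secondary buffer.
            self.front, self.backs[newest] = self.backs[newest], self.front
            self._next = newest
            self.latest = None
        return self.front


buffers = TripleBuffer()
for frame in ("frame 1", "frame 2", "frame 3"):
    buffers.render(frame)        # three frames finish before one refresh
shown_first = buffers.on_refresh()
shown_again = buffers.on_refresh()   # nothing new rendered in between
buffers.render("frame 4")
shown_next = buffers.on_refresh()

print(shown_first, shown_again, shown_next)  # frame 3 frame 3 frame 4
```

In this model frames 1 and 2 are never displayed: the GPU kept rendering at full speed, and the display simply picked up the most recent result at the refresh.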

Should you use Vertical Sync?

Yes and no. The overall problem boils down to preference. Screen tearing can be annoying, yes, but is it tolerable? Does it ruin the experience? If you’re viewing little amounts and it’s not an issue, then don’t bother with Vsync. If your display has a super-high refresh rate that your GPU can’t match, chances are you’ll likely not see tearing anyway.

But if you need Vsync, just remember the drawbacks. It will cap the framerate either at the display’s refresh rate, or half that rate if the GPU can’t maintain the higher cap. However, the latter halved number will produce visual “lag” that could hinder gameplay.

Of course, there are other solutions that address screen tearing that we’ll cover in other articles. These include hardware-based solutions for displays provided by Nvidia (G-Sync) and AMD (FreeSync). Other alternatives supporting monitors with variable refresh rates include Enhanced Sync (AMD GPUs only), Fast Sync (Nvidia GPUs only), Adaptive VSync (Nvidia GPUs only), and VESA’s Adaptive Sync (AMD, Nvidia). Intel’s upcoming discrete graphics cards will supposedly support Adaptive Sync as well.
